Prompt Registry

MLflow Prompt Registry is a powerful tool that streamlines prompt engineering and management in your Generative AI (GenAI) applications. It enables you to version, track, and reuse prompts across your organization, helping maintain consistency and improving collaboration in prompt development.

Key Benefits

Version Control

Track the evolution of your prompts with Git-inspired commit-based versioning and side-by-side comparison with diff highlighting. Prompt versions in MLflow are immutable, providing strong guarantees for reproducibility.

Aliasing

Build robust yet flexible deployment pipelines for prompts, allowing you to isolate prompt versions from main application code and perform tasks such as A/B testing and roll-backs with ease.

Lineage

Seamlessly integrate with MLflow's existing features such as model tracking and evaluation for end-to-end GenAI lifecycle management.

Collaboration

Share prompts across your organization with a centralized registry, enabling teams to build upon each other's work.

Getting Started

1. Create a Prompt

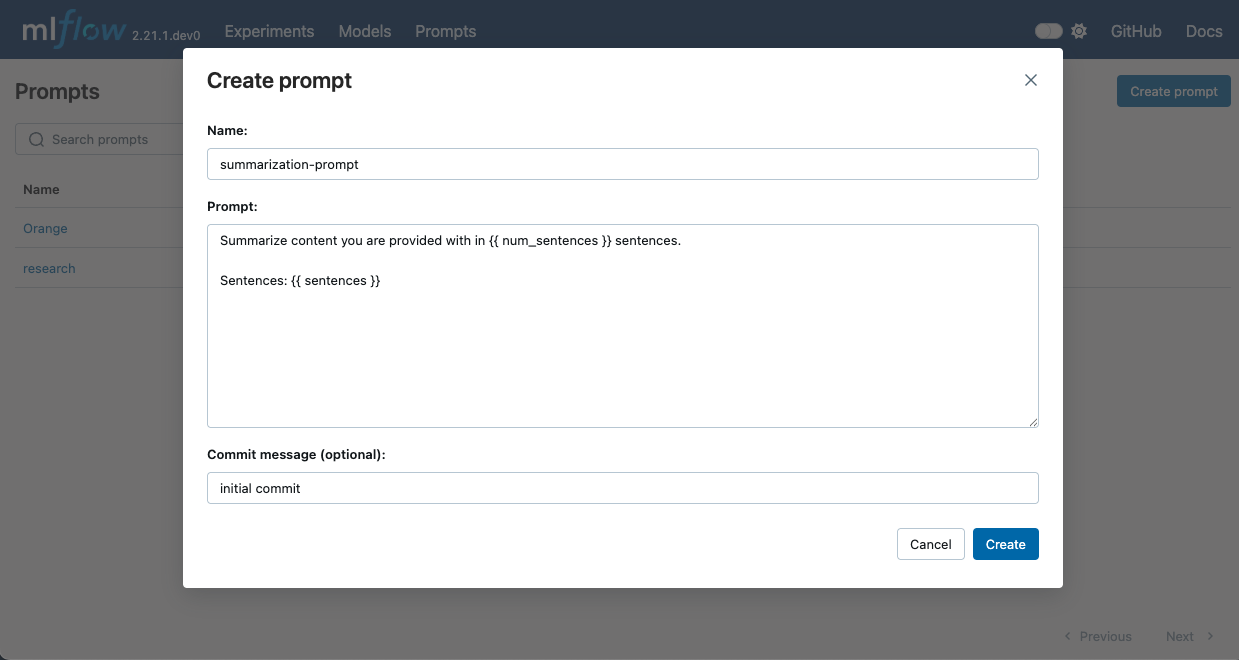

- Run mlflow ui in your terminal to start the MLflow UI.

- Navigate to the Prompts tab in the MLflow UI.

- Click on the Create Prompt button.

- Fill in the prompt details such as name, prompt template text, and commit message (optional).

- Click Create to register the prompt.

Prompt template text can contain variables in {{variable}} format. These variables can be filled with dynamic content when using the prompt in your GenAI application. MLflow also provides the to_single_brace_format() API to convert templates into single brace format for frameworks like LangChain or LlamaIndex that require single brace interpolation.
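To make the two brace styles concrete, here is a minimal standalone sketch of the conversion. It does not call MLflow: the helper names to_single_brace and fill are hypothetical, and the regular expression is an assumption about what counts as a variable (an identifier in double braces, optionally padded with spaces). It is not a description of how to_single_brace_format() is implemented.

```python
import re

# Assumption: a variable is an identifier wrapped in double braces,
# optionally padded with spaces, e.g. {{ name }} or {{name}}.
_VARIABLE = re.compile(r"\{\{\s*([A-Za-z_]\w*)\s*\}\}")


def to_single_brace(template: str) -> str:
    """Rewrite {{ var }} placeholders as {var} (hypothetical helper)."""
    return _VARIABLE.sub(r"{\1}", template)


def fill(template: str, **values: object) -> str:
    """Substitute values into {{ var }} placeholders (hypothetical helper)."""
    return _VARIABLE.sub(lambda m: str(values[m.group(1)]), template)


template = "Summarize the content in {{ num_sentences }} sentences: {{ text }}"
single = to_single_brace(template)
filled = fill(template, num_sentences=2, text="MLflow tracks experiments.")
print(single)
print(filled)
```

The first print shows the template in the single brace form that frameworks such as LangChain expect. The second shows the same template with its variables filled in.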

To create a new prompt using the Python API, use the mlflow.genai.register_prompt() API:

import mlflow

# Use double curly braces for variables in the template
initial_template = """\
Summarize content you are provided with in {{ num_sentences }} sentences.

Sentences: {{ sentences }}
"""

# Register a new prompt
prompt = mlflow.genai.register_prompt(
    name="summarization-prompt",
    template=initial_template,
    # Optional: Provide a commit message to describe the changes
    commit_message="Initial commit",
    # Optional: Set tags that apply to the prompt (across versions)
    tags={
        "author": "author@example.com",
        "task": "summarization",
        "language": "en",
    },
)

# The prompt object contains information about the registered prompt
print(f"Created prompt '{prompt.name}' (version {prompt.version})")

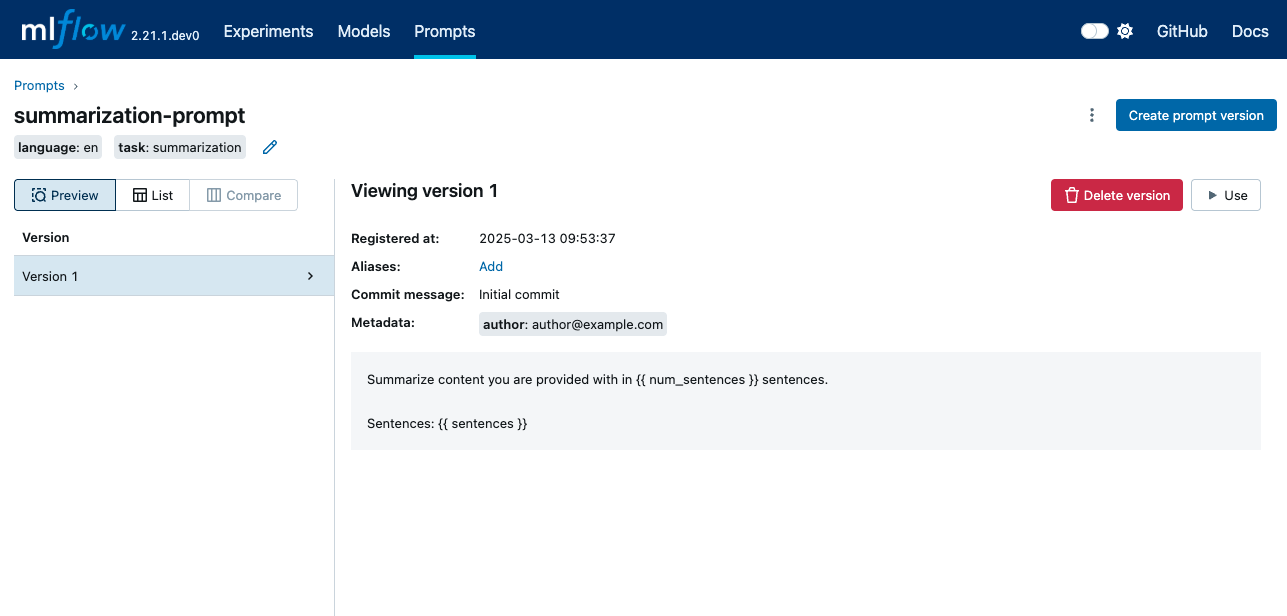

This creates a new prompt with the specified template text and metadata. The prompt is now available in the MLflow UI for further management.

2. Update the Prompt with a New Version

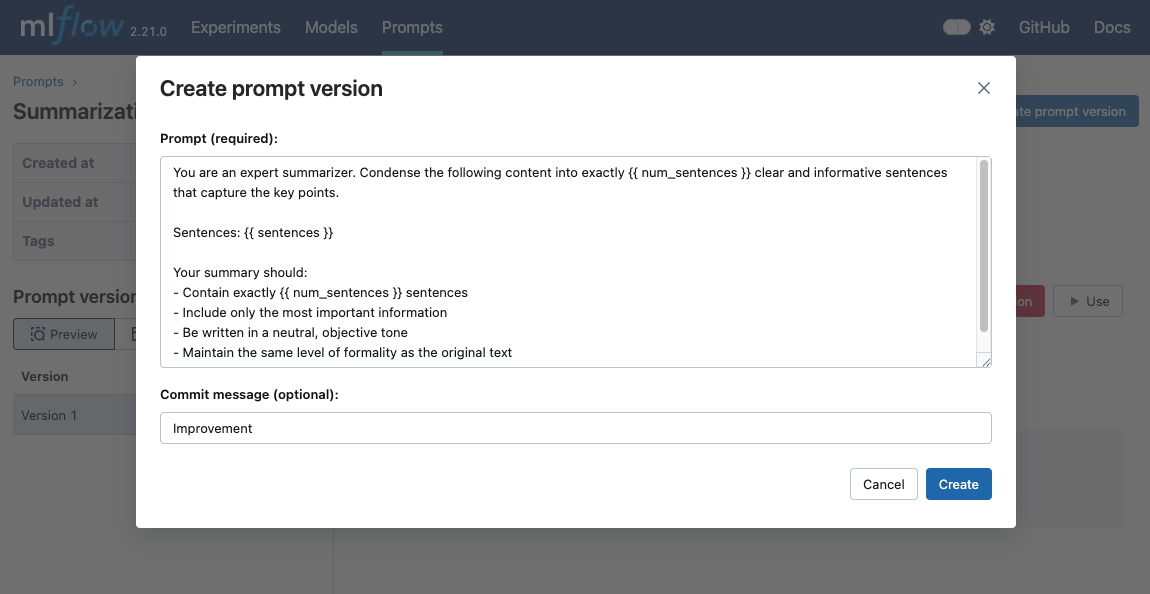

- The previous step leads to the created prompt page. (If you closed the page, navigate to the Prompts tab in the MLflow UI and click on the prompt name.)

- Click on the Create prompt Version button.

- The popup dialog is pre-filled with the existing prompt text. Modify the prompt as you wish.

- Click Create to register the new version.

To update an existing prompt with a new version, use the mlflow.genai.register_prompt() API with the existing prompt name:

import mlflow

new_template = """\
You are an expert summarizer. Condense the following content into exactly {{ num_sentences }} clear and informative sentences that capture the key points.

Sentences: {{ sentences }}

Your summary should:
- Contain exactly {{ num_sentences }} sentences
- Include only the most important information
- Be written in a neutral, objective tone
- Maintain the same level of formality as the original text
"""

# Register a new version of an existing prompt
updated_prompt = mlflow.genai.register_prompt(
    name="summarization-prompt",  # Specify the existing prompt name
    template=new_template,
    commit_message="Improvement",
    tags={
        "author": "author@example.com",
    },
)

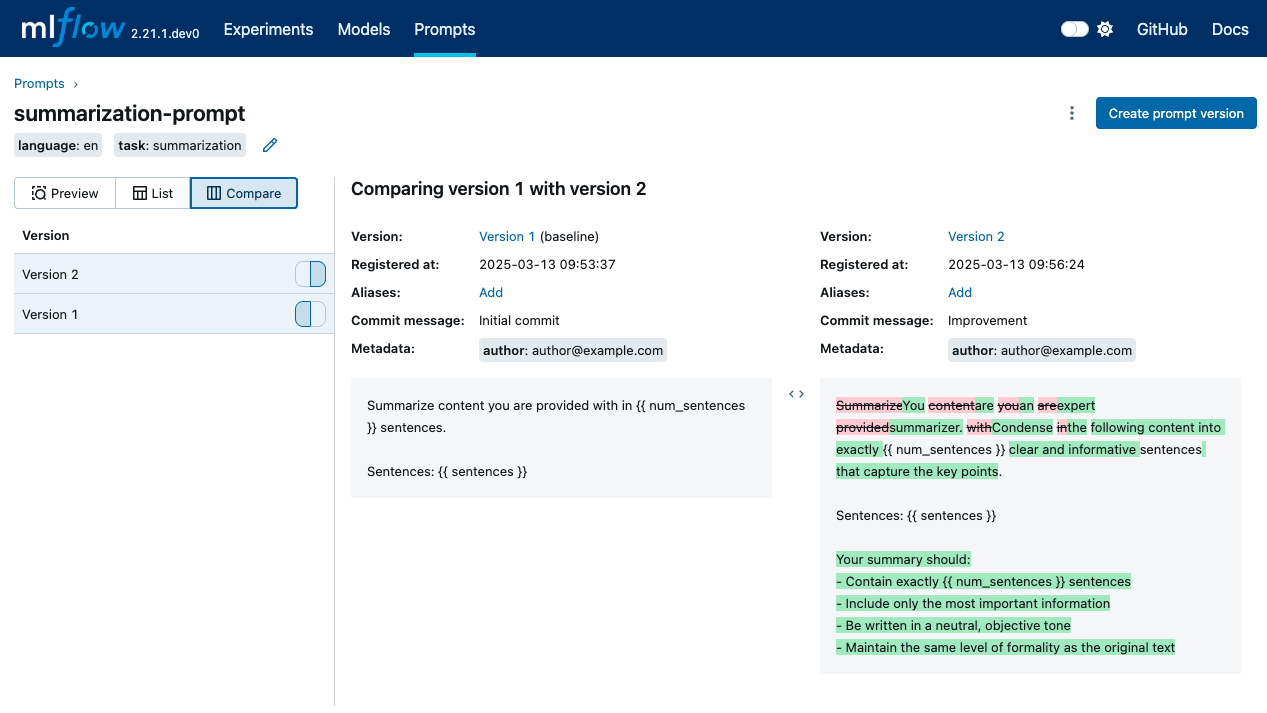

3. Compare the Prompt Versions

Once you have multiple versions of a prompt, you can compare them to understand the changes between versions. To compare prompt versions in the MLflow UI, click on the Compare tab on the prompt details page.
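Outside the UI, a similar comparison can be approximated in plain Python. The sketch below runs the standard library's difflib on two template strings. The strings are shortened stand-ins for the two versions registered in the earlier steps, and nothing here depends on MLflow.

```python
import difflib

# Shortened stand-ins for version 1 and version 2 of the template.
v1 = """Summarize content you are provided with in {{ num_sentences }} sentences.
Sentences: {{ sentences }}
"""
v2 = """You are an expert summarizer. Condense the following content into exactly {{ num_sentences }} sentences.
Sentences: {{ sentences }}
"""

# unified_diff yields header lines, then lines prefixed with "-", "+", or " ".
diff = list(
    difflib.unified_diff(
        v1.splitlines(),
        v2.splitlines(),
        fromfile="version 1",
        tofile="version 2",
        lineterm="",
    )
)
print("\n".join(diff))
```

Lines starting with - appear only in version 1, lines starting with + appear only in version 2, and lines starting with a space are unchanged.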

4. Load and Use the Prompt

To use a prompt in your GenAI application, you can load it with the mlflow.genai.load_prompt() API and fill in the variables using the mlflow.entities.Prompt.format() method of the prompt object:

import mlflow
import openai

target_text = """
MLflow is an open source platform for managing the end-to-end machine learning lifecycle.
It tackles four primary functions in the ML lifecycle: Tracking experiments, packaging ML
code for reuse, managing and deploying models, and providing a central model registry.
MLflow currently offers these functions as four components: MLflow Tracking,
MLflow Projects, MLflow Models, and MLflow Registry.
"""

# Load the prompt
prompt = mlflow.genai.load_prompt("prompts:/summarization-prompt/2")

# Use the prompt with an LLM
client = openai.OpenAI()
response = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": prompt.format(num_sentences=1, sentences=target_text),
        }
    ],
    model="gpt-4o-mini",
)
print(response.choices[0].message.content)
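Before sending a formatted prompt to a model, it can help to confirm that every template variable has a value. The following standalone sketch does that check with a regular expression. required_variables and check_inputs are hypothetical helpers, not MLflow APIs, and the pattern assumes variables look like {{ name }}.

```python
import re

# Assumption: variables are identifiers in double braces, e.g. {{ name }}.
_VARIABLE = re.compile(r"\{\{\s*([A-Za-z_]\w*)\s*\}\}")


def required_variables(template: str) -> set[str]:
    """Return the distinct variable names used in a template."""
    return set(_VARIABLE.findall(template))


def check_inputs(template: str, **values: object) -> None:
    """Raise ValueError if any template variable has no supplied value."""
    missing = required_variables(template) - set(values)
    if missing:
        raise ValueError(f"Missing prompt variables: {sorted(missing)}")


template = (
    "Condense the content into exactly {{ num_sentences }} sentences.\n"
    "Sentences: {{ sentences }}\n"
    "- Contain exactly {{ num_sentences }} sentences"
)
names = required_variables(template)
check_inputs(template, num_sentences=1, sentences="...")  # passes silently
```

Calling check_inputs with only num_sentences would raise a ValueError naming sentences as missing, which surfaces the mistake before any model call is made.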

5. Search Prompts

You can discover prompts by name, tag or other registry fields:

import mlflow

# Fluent API: returns a flat list of all matching prompts
prompts = mlflow.genai.search_prompts(filter_string="task='summarization'")
print(f"Found {len(prompts)} prompts")

# For pagination control, use the client API:
from mlflow.tracking import MlflowClient

client = MlflowClient()
all_prompts = []
token = None
while True:
    page = client.search_prompts(
        filter_string="task='summarization'",
        max_results=50,
        page_token=token,
    )
    all_prompts.extend(page)
    token = page.token
    if not token:
        break
print(f"Total prompts across pages: {len(all_prompts)}")

Prompt Object

The Prompt object is the core entity in MLflow Prompt Registry. It represents a versioned template text that can contain variables for dynamic content.

Key attributes of a Prompt object:

- Name: A unique identifier for the prompt.

- Template: The text of the prompt, which can include variables in {{variable}} format.

- Version: A sequential number representing the revision of the prompt.

- Commit Message: A description of the changes made in the prompt version, similar to Git commit messages.

- Tags: Optional key-value pairs assigned at the prompt version for categorization and filtering. For example, you may add tags for project name, language, etc., which apply to all versions of the prompt.

- Alias: A mutable named reference to the prompt. For example, you can create an alias named production to refer to the version used in your production system. See Aliases for more details.
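To illustrate how these attributes relate, the sketch below models immutable versions and mutable aliases with a small in-memory class. It is a toy model only: ToyRegistry, PromptVersion, and their methods are hypothetical names chosen for illustration and do not reflect MLflow's storage or API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)  # frozen: a version cannot be modified once created
class PromptVersion:
    name: str
    version: int
    template: str
    commit_message: str = ""


@dataclass
class ToyRegistry:
    versions: dict = field(default_factory=dict)  # name -> list of versions
    aliases: dict = field(default_factory=dict)  # (name, alias) -> version number

    def register(self, name: str, template: str, commit_message: str = "") -> PromptVersion:
        """Append a new version; version numbers are sequential per name."""
        history = self.versions.setdefault(name, [])
        pv = PromptVersion(name, len(history) + 1, template, commit_message)
        history.append(pv)
        return pv

    def set_alias(self, name: str, alias: str, version: int) -> None:
        """Point an alias at a version; re-pointing it is how a rollback works."""
        self.aliases[(name, alias)] = version

    def load(self, name: str, alias: str) -> PromptVersion:
        """Resolve an alias to the version it currently references."""
        return self.versions[name][self.aliases[(name, alias)] - 1]


registry = ToyRegistry()
registry.register("summarization-prompt", "Summarize in {{ n }} sentences.", "Initial commit")
registry.register("summarization-prompt", "You are an expert summarizer...", "Improvement")

registry.set_alias("summarization-prompt", "production", 2)
current = registry.load("summarization-prompt", "production")
registry.set_alias("summarization-prompt", "production", 1)  # roll back
rolled_back = registry.load("summarization-prompt", "production")
print(current.version, rolled_back.version)
```

The application code only ever asks for the production alias, so moving the alias from version 2 back to version 1 changes which template is served without touching either version.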

FAQ

Q: How do I delete a prompt version?

A: You can delete a prompt version using the MLflow UI or Python API:

import mlflow

# Delete a prompt version
client = mlflow.MlflowClient()
client.delete_prompt_version("summarization-prompt", version=2)

To avoid accidental deletion, you can only delete one version at a time via the API. If you delete all versions of a prompt, the prompt itself will be deleted.

Q: Can I update the prompt template of an existing prompt version?

A: No, prompt versions are immutable once created. To update a prompt, create a new version with the desired changes.

Q: Can I use prompt templates with frameworks like LangChain or LlamaIndex?

A: Yes, you can load prompts from MLflow and use them with any framework. The following example demonstrates how to use a prompt registered in MLflow with LangChain. Also refer to Logging Prompts with LangChain for more details.

import mlflow
from langchain.prompts import PromptTemplate

# Load prompt from MLflow (the summarization prompt registered earlier)
prompt = mlflow.genai.load_prompt("prompts:/summarization-prompt/2")

# Convert the prompt to single brace format for LangChain (MLflow uses double braces),
# using the `to_single_brace_format` method.
langchain_prompt = PromptTemplate.from_template(prompt.to_single_brace_format())
print(langchain_prompt.input_variables)
# Output: ['num_sentences', 'sentences']

Q: Is Prompt Registry integrated with the Prompt Engineering UI?

A: Direct integration between the Prompt Registry and the Prompt Engineering UI is coming soon. In the meantime, you can iterate on a prompt template in the Prompt Engineering UI and register the final version in the Prompt Registry by manually copying the prompt template.