LLM / GenAI

LLMs (Large Language Models) have become essential in machine learning, enabling tasks like natural language understanding and code generation. However, fully leveraging their potential often means managing many moving pieces, such as prompts, LLM providers, frameworks, knowledge bases, and tools.

Key Challenges in LLM / GenAI Development

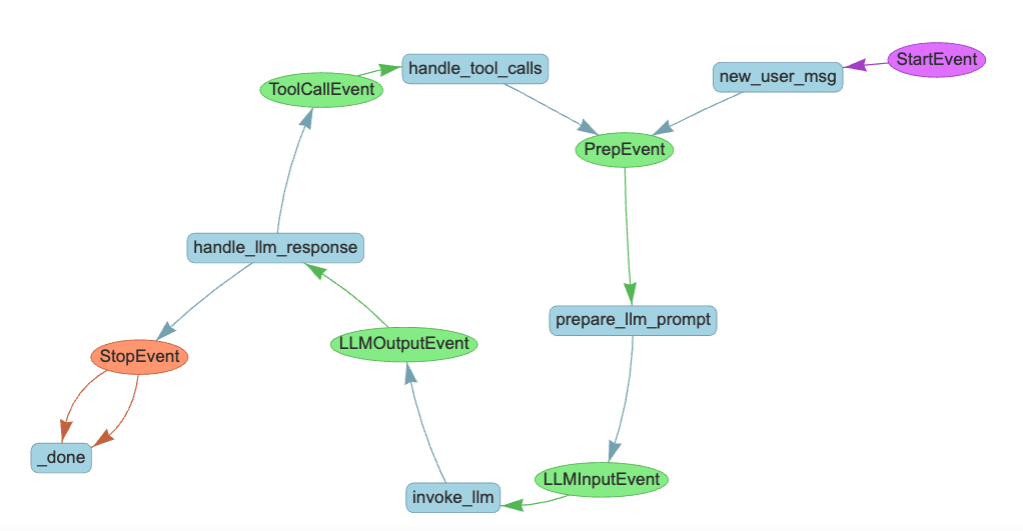

Complexity in Compound AI Systems

LLMs are often integral parts of larger, compound AI systems that incorporate multiple models, tools, prompts, and other components. With the rise of autonomous agents, the system's control flow becomes more dynamic and complex. Effectively managing such systems requires well-defined processes and specialized tools to handle their intricacies.

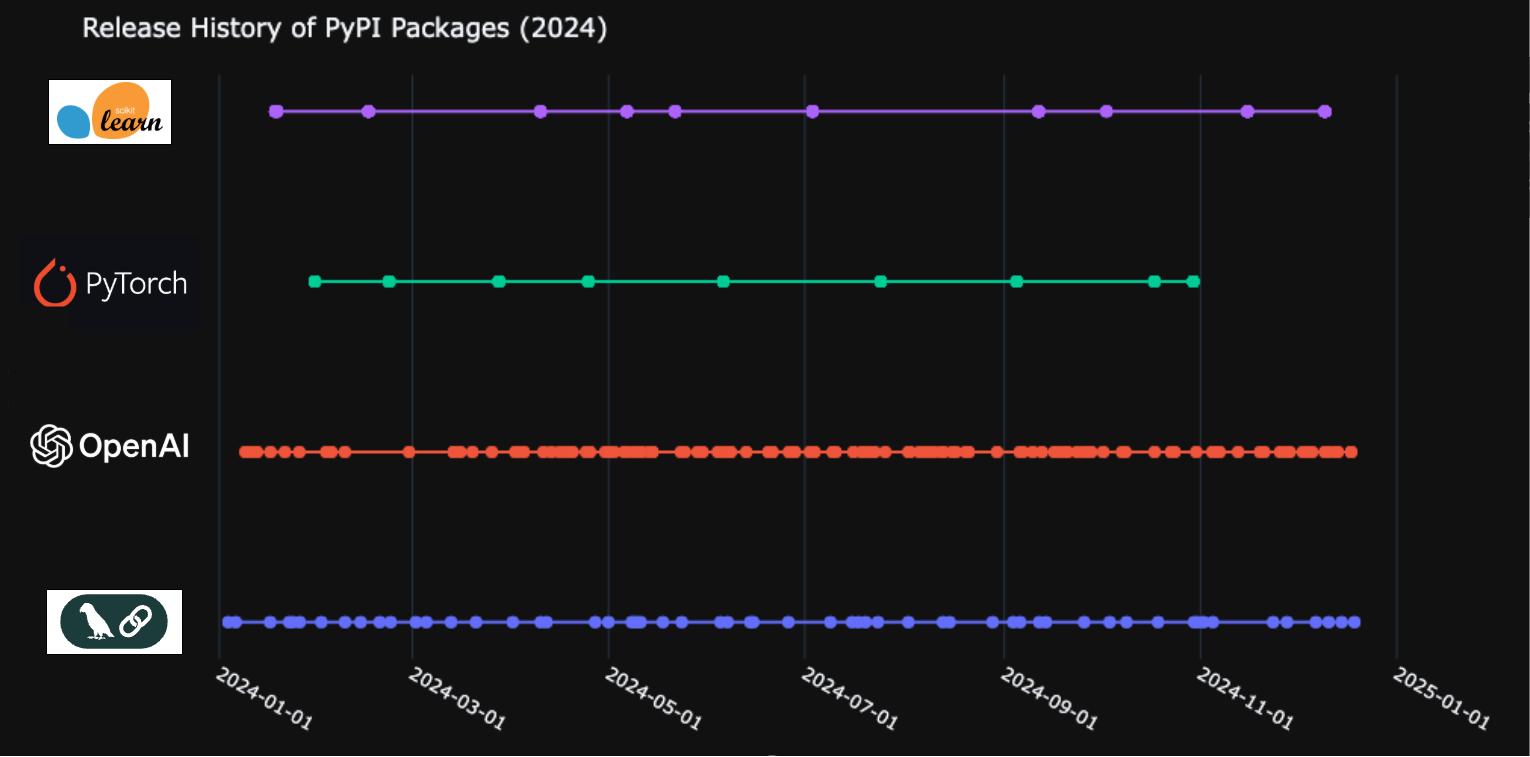

Rapidly Evolving Industry

The GenAI industry is evolving at a fast pace, with new models, tools, and libraries emerging daily. Keeping track of versions and managing dependencies is crucial to maintaining the stability and reproducibility of AI systems.
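One lightweight way to keep track of library versions is to record them alongside each experiment. The sketch below uses only the Python standard library. `installed_versions` is a hypothetical helper (not an MLflow API), and the package names are examples only.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return a {package: version} mapping; missing packages map to None."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    # Package names are examples; list whatever your application depends on.
    for name, ver in installed_versions(["langchain", "openai", "mlflow"]).items():
        print(f"{name}=={ver}" if ver else f"{name} is not installed")
```

Storing this mapping with every run makes it possible to tell later which library release produced a given result.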

The following diagram illustrates the high release frequency of two popular GenAI libraries, LangChain and OpenAI, compared with that of classical ML and DL libraries.

Debugging Challenges

Debugging LLM-based applications is particularly difficult due to the compound nature of these systems and the high level of abstraction in many frameworks. Gaining a clear understanding of how LLMs and their interacting components behave can be a daunting task without the right tools and techniques.

Quality Assurance

Ensuring the quality of machine learning systems has always been a challenge. With LLMs, the models’ complexity and the dynamic nature of tasks make it even harder to assess and guarantee quality. Robust QA processes are essential to ensure reliability and performance.

Production Monitoring

As LLM-based applications become more dynamic, monitoring their performance after deployment is increasingly critical to business success. Unlike traditional software monitoring, machine learning monitoring requires specialized tools to track model behavior and performance in real time.

MLflow's Support for LLMs aims to alleviate these challenges by introducing a suite of features and tools designed with the end-user in mind.

Why Choose MLflow?

- 🪽 Free and Open - MLflow is open source and FREE. Avoid vendor lock-in and secure your ML assets on your own choice of infrastructure. You can invest in the most critical business goals without worrying about the cost of the MLOps platform.

- 🛠️ Native Cloud Integration - MLflow can be self-hosted and is also offered as a managed service on Amazon SageMaker, Azure ML, Databricks, and more. This allows you to get started quickly and scale easily within your existing cloud infrastructure.

- 🤝 15+ GenAI Framework Support - MLflow integrates with more than a dozen GenAI libraries, including OpenAI, LangChain, LlamaIndex, DSPy, and more. This allows flexibility in choosing the right tool for your use case.

- 🔄 End-to-End - MLflow is designed for managing the end-to-end machine learning lifecycle, eliminating the complexity of managing multiple tools and moving assets between them.

- 🌍 Domain Agnostic - Real-world problems are not always solved by GenAI alone. MLflow provides a unified platform for managing traditional ML, deep learning, and GenAI models, making it a versatile tool for all your ML needs.

- 👥 Community - MLflow boasts a vibrant open source community as a part of the Linux Foundation. With 19,000+ GitHub stars and 15M+ monthly downloads, MLflow is a trusted standard in the MLOps/LLMOps ecosystem.

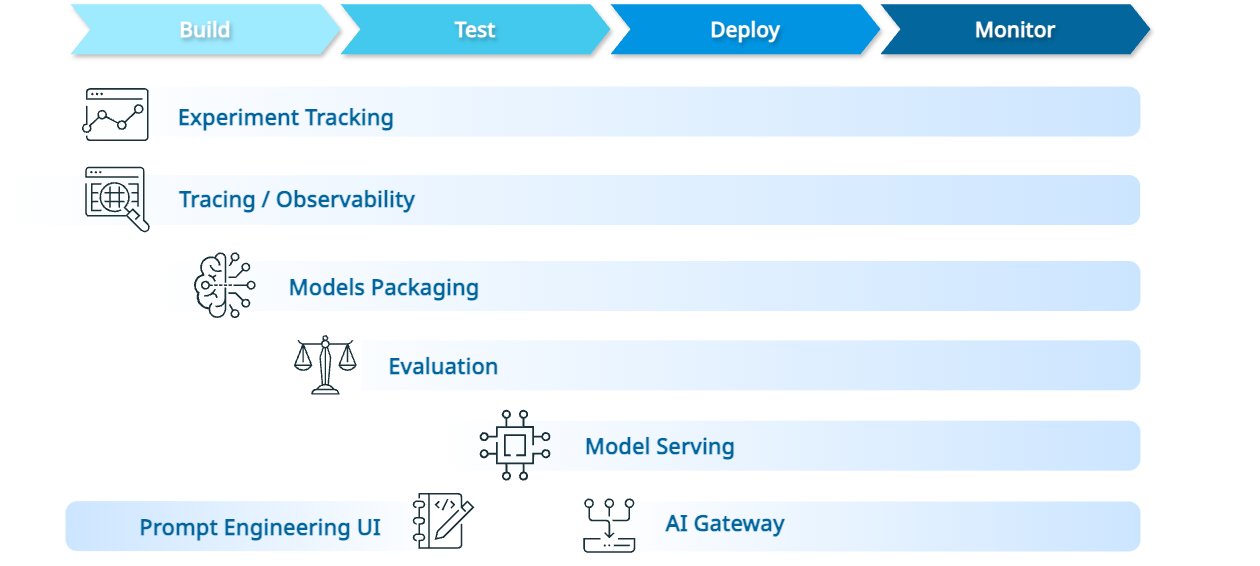

How MLflow Solves LLMOps Challenges

MLflow offers a comprehensive suite of tools and features to simplify the development, deployment, and management of LLMs. Here are some of the key features that make MLflow the go-to platform for LLM development:

Experiment Tracking

Experiment Tracking is a core feature of the MLflow platform, allowing you to organize the many assets and artifacts of your LLM/GenAI projects, such as models, prompts, traces, and metrics, in a single place. ➡︎ Learn more

Key Benefits of Experiment Tracking

- Single Source of Truth: MLflow serves as a centralized location for storing and managing all your LLM assets, including models, prompts, and traces, ensuring that you have a single source of truth for your projects.

- Better Collaboration: Experiment Tracking allows you to share your work with your team members, enabling them to reproduce your results and provide feedback.

- Lineage Tracking: MLflow captures the lineage of your LLM assets throughout the different stages of the project, allowing you to trace where your models, prompts, and other artifacts came from.

- Comparative Analysis: MLflow enables you to compare different versions of your LLM models, prompts, and other assets, helping you make informed decisions and improve the quality of your LLM applications.

- Governance and Compliance: With a central repository of machine learning assets in your organization, MLflow helps you maintain governance and compliance standards, ensuring that your important assets are accessible only to authorized users.


Tracing / Observability

MLflow Tracing ensures LLM observability in your GenAI applications by capturing LLM calls and other important details, such as document retrieval, data queries and tool calls, allowing you to monitor and get deep insights into the inner workings of your applications. ➡︎ Learn more

Key Benefits of MLflow Tracing

- Debugging: Traces provide a detailed view of what happens beneath the abstraction layer, helping you quickly identify and resolve issues in your LLM applications. With its native Jupyter Notebook integration, MLflow offers a seamless debugging experience.

- Inspect Quality: After evaluating your models or agents with MLflow Evaluation, you can analyze the auto-generated traces to understand the behavior of your LLMs and make informed decisions.

- Production Monitoring: Traces are essential for monitoring the performance of your LLMs in production. MLflow Tracing allows you to identify bottlenecks and performance issues in production, enabling you to take corrective actions and continuously optimize your applications.

- OpenTelemetry: MLflow Traces are compatible with OpenTelemetry, an industry-standard observability framework, allowing you to integrate with popular observability tools like Prometheus, Grafana, and Jaeger for advanced monitoring and analysis.

- Framework Support: MLflow Tracing supports 15+ GenAI libraries, including OpenAI, LangChain, LlamaIndex, DSPy, Anthropic, Amazon Bedrock, and more. By adding just one line of code, you can start tracing LLM applications built with these libraries.

Model Packaging

MLflow's model packaging helps you manage the moving pieces of GenAI systems, including prompts, LLM parameters, and fine-tuned weights, along with frozen dependency versions. This makes your experiments highly reproducible.

Key Benefits of Model Packaging in MLflow

- Reproducibility: MLflow captures and freezes all dependencies during model packaging, so your models can be reproduced consistently across different environments.

- Versioning: MLflow models are versioned and registered to the MLflow Model Registry before production deployment, allowing you to keep track of fast-evolving models and assets with ease.

- Native Support for Popular Packages: Standardized interfaces for tasks like saving, logging, and managing inference configurations.

- Unified prediction APIs: MLflow models can be loaded as a convenient `PyFunc` wrapper that exposes a consistent `predict()` API, regardless of the underlying library. This simplifies the evaluation, deployment, and production use of GenAI systems.

- Model Signature: MLflow's model signature feature allows you to define the input schema, output schema, and optional parameters of your model, making it easier to understand how to interface with the model and share it with others.

MLflow provides native integrations for model packaging with major GenAI libraries. If your favorite library is not among them, you can package an arbitrary model or agent using MLflow's PythonModel framework.

Evaluation

MLflow's LLM Evaluation is designed to simplify the evaluation process, offering a streamlined approach to compare foundational models, prompts, and compound AI systems. ➡︎ Learn more

Key Benefits of MLflow LLM Evaluation

- Simplified Evaluation: Leverage MLflow's `mlflow.evaluate()` API and built-in harnesses to test your GenAI models with ease.

- LLM-as-a-Judge: MLflow provides built-in LLM-as-a-Judge metrics with flexible configuration for judge LLM providers, allowing you to plug complex evaluation criteria into an automated evaluation process and to evaluate in bulk.

- Customizable Metrics: Beyond the provided metrics, MLflow supports a plugin-style interface for custom scoring, enhancing the evaluation's flexibility.

- Comparative Analysis: Effortlessly compare foundational models, providers, and prompts to make informed decisions.

- Deep Insights: Traces are automatically generated for evaluation runs, allowing you to get deeper insights into the evaluation results and fix quality issues with confidence.

Model Serving

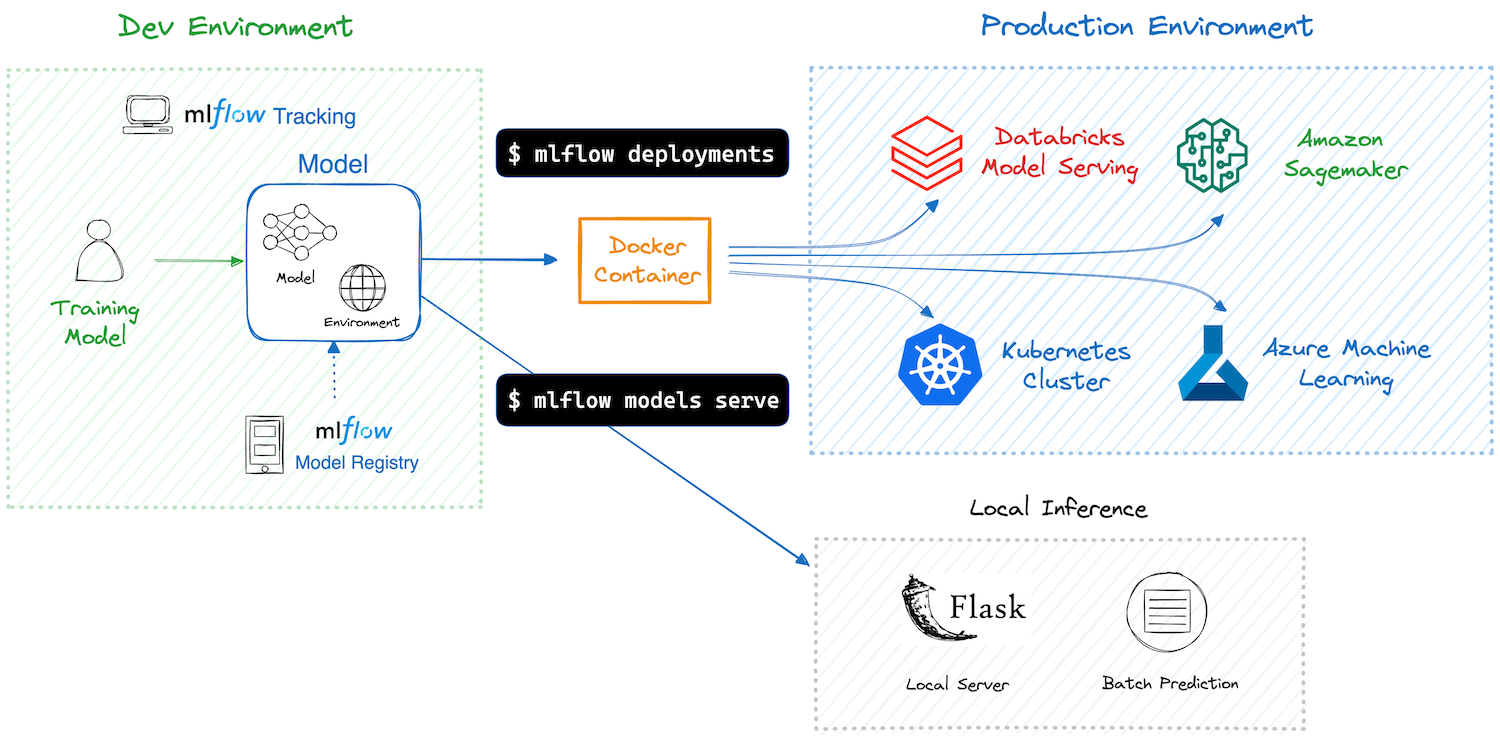

MLflow models, once packaged, can be deployed easily to different targets including Kubernetes clusters, cloud providers (Amazon SageMaker, Azure Machine Learning), Databricks, and any serving infrastructure that is based on Docker containers. ➡︎ Learn more

Key Benefits of MLflow Model Serving

- Effortless Deployment: MLflow provides a simple interface for deploying models to various targets, eliminating the need to write boilerplate code.

- Dependency and Environment Management: MLflow ensures that the deployment environment mirrors the training environment, capturing all dependencies. This guarantees that models run consistently, regardless of where they're deployed.

- Packaging Models and Code: With MLflow, not just the model, but any supplementary code and configurations are packaged along with the deployment container. This ensures that the model can be executed seamlessly without any missing components.

- Avoid Vendor Lock-in: MLflow provides a standard format for packaging models and unified APIs for deployment. You can easily switch between deployment targets without having to rewrite your code.
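As a rough sketch of what calling a deployed model looks like, the example below builds a request for the `/invocations` endpoint that MLflow's model server exposes. It uses only the standard library. `build_invocation_request` is a hypothetical helper, and the host, port, and column name are assumptions (for example, a model started locally with `mlflow models serve -m <model_uri> -p 5000`).

```python
import json
import urllib.request


def build_invocation_request(base_url, columns, rows):
    """Build a POST request for an MLflow model server's /invocations endpoint."""
    # "dataframe_split" is one of the JSON input formats the server accepts.
    payload = {"dataframe_split": {"columns": columns, "data": rows}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/invocations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumption: a model is being served locally on port 5000.
request = build_invocation_request(
    "http://127.0.0.1:5000", ["question"], [["What is MLflow?"]]
)
print(request.full_url)
print(request.data.decode("utf-8"))

# Sending the request requires a running server, so it is left commented out:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Because the request format is tied to the packaged model and not to the hosting platform, the same client code should work against other deployment targets once the base URL changes.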

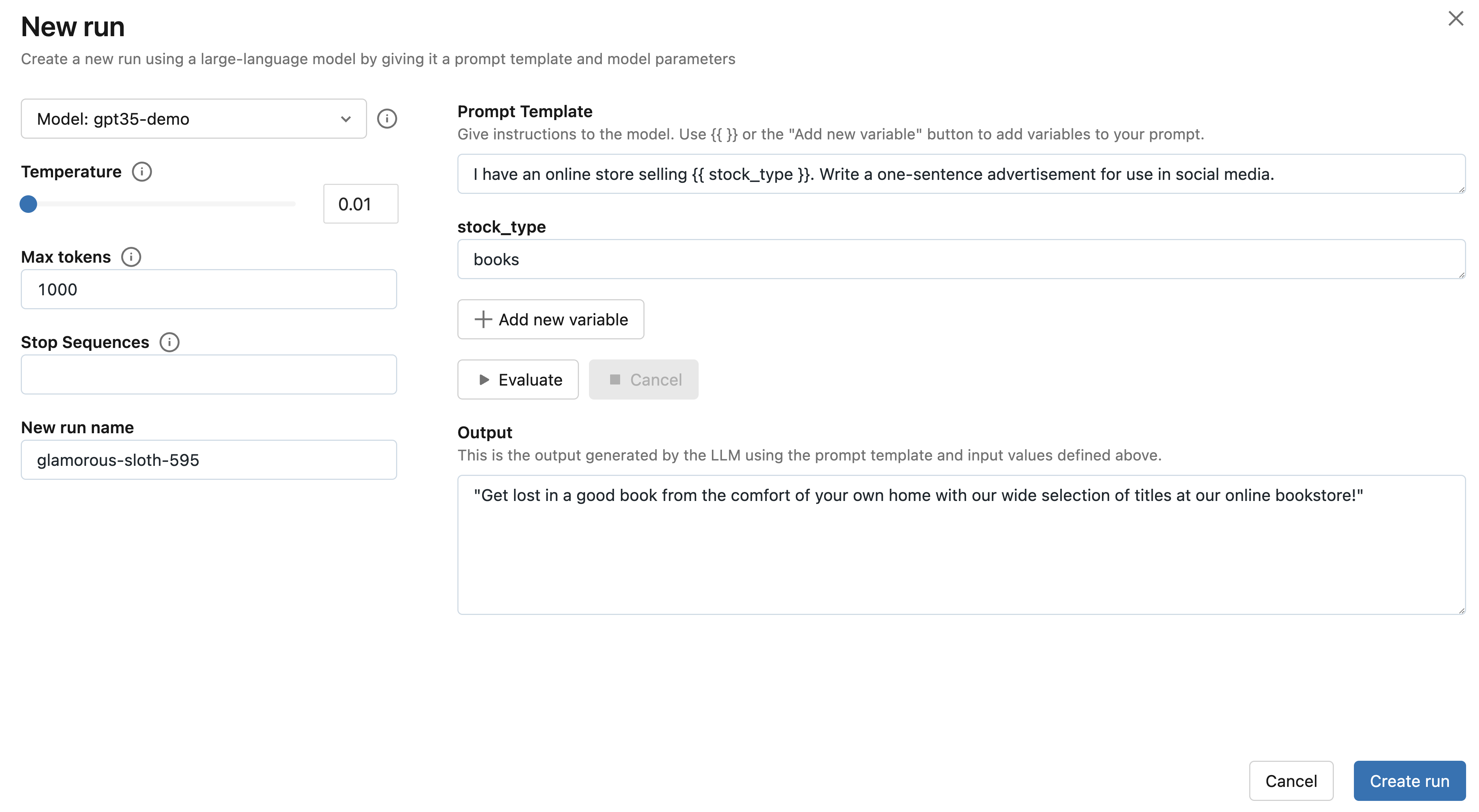

Prompt Engineering UI

MLflow Prompt Engineering UI is a no-code playground for crafting and refining prompts for LLMs, integrated with MLflow's tracking and evaluation framework. ➡︎ Learn more

Key Benefits of MLflow Prompt Engineering UI

- Iterative Development: Streamlined process for trial and error without the overwhelming complexity.

- UI-Based Prototyping: Prototype, iterate, and refine prompts without diving deep into code.

- Accessible Engineering: Makes prompt engineering more user-friendly, speeding up experimentation.

- Optimized Configurations: Quickly home in on the best model configurations for tasks like question answering or document summarization.

- Transparent Tracking: Every model iteration and configuration is meticulously tracked, ensuring reproducibility and transparency in your development process.

MLflow AI Gateway

Serving as a unified interface, MLflow AI Gateway simplifies interactions with multiple LLM providers and your own MLflow models. ➡︎ Learn more

Key Benefits of the MLflow AI Gateway

- Unified Endpoint: No more juggling between multiple provider APIs.

- Simplified Integrations: One-time setup, no repeated complex integrations.

- Secure Credential Management: Centralized storage prevents scattered API keys. No hardcoding or user-handled keys.

- Consistent API Experience: Uniform, easy-to-use REST API across all providers.

- Seamless Provider Swapping: Swap providers without touching your code and with zero downtime.