New Features

LangGraph, the GenAI Agent authoring framework from LangChain, is now natively supported in MLflow using the Models from Code feature.

AutoGen, a multi-turn agent framework from Microsoft, now has automatic tracing integration with MLflow.

LlamaIndex, the popular RAG and Agent authoring framework, now has native support within MLflow for application logging and full support for tracing.

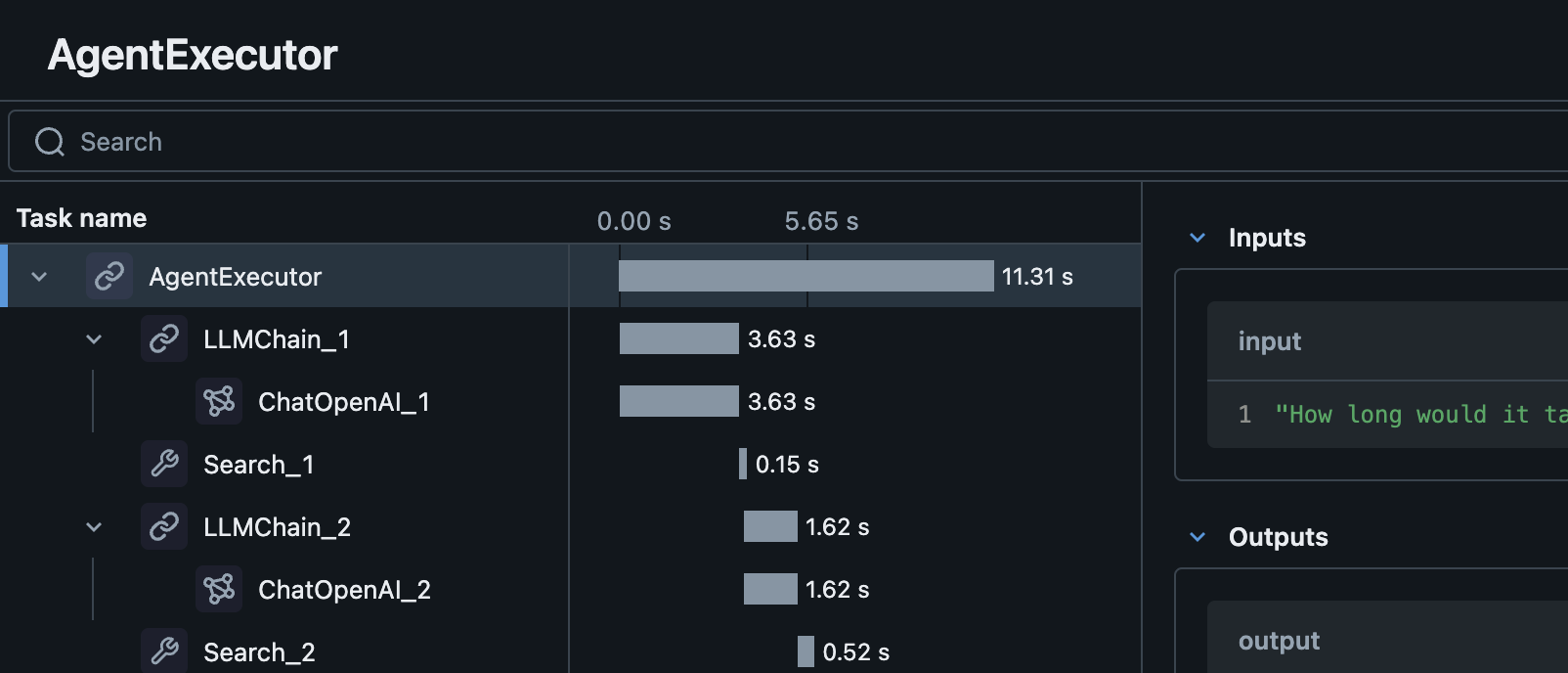

MLflow Tracing is a powerful tool designed to enhance your ability to monitor, analyze, and debug GenAI applications by allowing you to inspect the intermediate outputs generated as your application handles a request.

MLflow AI Gateway now has an integration with Unity Catalog, allowing you to leverage registered functions as tools for enhancing your chat application.

Autologging support has now been added for the OpenAI model flavor. With this feature, MLflow will automatically log a model upon calling the OpenAI API.

The infer_code_paths option, when set while logging a model, determines which additional code modules the model needs and packages them with it, keeping the training and production environments consistent.

The MLflow evaluate API now supports fully customizable system prompts to create entirely novel evaluation metrics for GenAI use cases.

LangChain models and custom Python Models now support a predict_stream API, allowing for generator return types for streaming outputs.

The LangChain flavor in MLflow now supports defining a model as a code file to simplify logging and loading of LangChain models.

MLflow now supports asynchronous artifact logging, allowing for faster and more efficient logging of models with many artifacts.

The transformers flavor has received standardization support for embedding models.

Embedding models now return a standard llm/v1/embeddings output format to conform to OpenAI embedding response structures.
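For orientation, the sketch below builds a response in the shape of an OpenAI embeddings payload using plain Python. The helper `to_embeddings_response` and its arguments are hypothetical and only illustrate the structure; the exact fields MLflow emits for a given model may differ.

```python
def to_embeddings_response(vectors, model_name, prompt_tokens):
    """Wrap raw embedding vectors in an OpenAI-style embeddings response."""
    return {
        "object": "list",
        "data": [
            {"object": "embedding", "index": i, "embedding": list(vec)}
            for i, vec in enumerate(vectors)
        ],
        "model": model_name,
        # Embedding requests generate no completion tokens, so both counts match.
        "usage": {"prompt_tokens": prompt_tokens, "total_tokens": prompt_tokens},
    }


response = to_embeddings_response([[0.1, 0.2], [0.3, 0.4]], "my-embedder", 7)
```

A client written against this structure reads each vector from `response["data"][i]["embedding"]`, regardless of which embedding model produced it.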

The transformers flavor in MLflow has received a significant feature overhaul:

- All supported pipeline types can now be logged without restriction

- Pipelines using foundation models can now be logged without copying the large model weights

MLflow now natively supports PEFT (Parameter-Efficient Fine-Tuning) models in the Transformers flavor. PEFT unlocks significantly more efficient model fine-tuning processes such as LoRA, QLoRA, and Prompt Tuning. Check out the new QLoRA fine-tuning tutorial to learn how to build your own cutting-edge models with MLflow and PEFT!

OpenAI-compatible chat models are now easier than ever to build in MLflow! ChatModel is a new Pyfunc subclass that makes it easy to deploy and serve chat models with MLflow.

Check out the new tutorial on building an OpenAI-compatible chat model using TinyLlama-1.1B-Chat!

We've listened to your feedback and added a large number of new UI features designed to simplify the process of evaluating deep learning (DL) model training runs. Be sure to upgrade your tracking server to benefit from all of the new UI enhancements today!

When training deep learning models with PyTorch Lightning or TensorFlow with Keras, model checkpoint saving is now enabled. Checkpoints store model state during long-running training jobs, so training can be resumed if an issue is encountered.

The MLflow AI Gateway can now accept Mistral AI endpoints. Give their models a try today!

You can now log and deploy models in the new Keras 3 format, allowing you to work with TensorFlow, Torch, or JAX models with a new high-level, easy-to-use suite of APIs.

We've updated flavors that interact with the OpenAI SDK, bringing full support for the API changes with the 1.x release.

MLflow has a new homepage that has been completely modernized. Check it out today!

Autologging support for LangChain is now available. Try it out the next time you're building a Generative AI application with LangChain!

Complex input types for model signatures are now supported with native support of Array and Object types.