Version Tracking for GenAI Applications

MLflow's LoggedModel provides a central, versioned representation of your entire GenAI application, including its code, configurations, and associated artifacts like evaluation results and traces. This enables you to effectively manage the lifecycle of complex GenAI systems, ensuring reproducibility, facilitating debugging, and enabling confident deployment of the best-performing application versions.

This page introduces the core concepts of version tracking with LoggedModel. Subsequent pages in this section will detail specific methods for creating, managing, and utilizing these application versions.

Why Version Your GenAI Application?

GenAI applications are composite systems with many moving parts: code (agent logic, tools), configurations (LLM parameters, retrieval settings), and data. Iterating on these components without systematic versioning leads to significant challenges. MLflow LoggedModel helps you overcome these by addressing:

- Reproducibility: Ensures you can recreate any previous application state.

  - Challenge: Without versioning, it's hard to know which combination of code and configurations produced a specific result, making it difficult to reproduce successes or failures.

  - Solution: LoggedModel captures or links to the exact code (e.g., Git commit hash) and configurations used for a specific version, ensuring you can always reconstruct it.

- Debugging Regressions: Simplifies pinpointing the changes that cause issues.

  - Challenge: When application quality drops, identifying which change (in code, configurations, etc.) caused the regression can be a time-consuming and frustrating process.

  - Solution: By tracking LoggedModel versions, you can compare a problematic version against a known good version. This involves examining differences in their linked code (via commit hashes), configurations, evaluation results, and traces to isolate the changes that led to the regression.

- Objective Comparison and Deployment: Enables data-driven decisions for selecting the best version.

  - Challenge: Choosing which application version to deploy can be subjective without a clear way to compare performance based on consistent metrics.

  - Solution: LoggedModel versions can be systematically evaluated (e.g., using mlflow.genai.evaluate()). This allows you to compare metrics like quality scores, cost, and latency side by side, so you can deploy the version that performs best on those metrics.

- Auditability and Governance: Provides a clear record of what was deployed and when.

  - Challenge: For compliance or when investigating production incidents, you need to know the exact state of the application that was running at a specific time.

  - Solution: Each LoggedModel version serves as an auditable record, linking to the specific code and configurations, and can be associated with traces from its execution.
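The Debugging Regressions and Objective Comparison points come down to two operations on version metadata:

- diffing a known-good version against a regressed one;
- ranking candidate versions by a metric.

Below is a minimal plain-Python sketch of both. It assumes each version's commit, parameters and metrics have already been collected into a simple record. `VersionRecord`, `diff_versions` and `pick_best` are hypothetical names, not MLflow APIs. The parameter and metric values are illustrative placeholders, not real results.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any


@dataclass
class VersionRecord:
    """Hypothetical, simplified stand-in for the metadata tracked per application version."""
    name: str
    git_commit: str
    params: dict[str, Any] = field(default_factory=dict)
    metrics: dict[str, float] = field(default_factory=dict)


def diff_versions(good: VersionRecord, bad: VersionRecord) -> dict[str, tuple[Any, Any]]:
    """Return the code and configuration fields that differ between two versions."""
    changes: dict[str, tuple[Any, Any]] = {}
    if good.git_commit != bad.git_commit:
        changes["git_commit"] = (good.git_commit, bad.git_commit)
    # Walk the union of parameter names so added or removed settings also show up.
    for key in sorted(set(good.params) | set(bad.params)):
        before, after = good.params.get(key), bad.params.get(key)
        if before != after:
            changes[f"params.{key}"] = (before, after)
    return changes


def pick_best(versions: list[VersionRecord], metric: str, max_latency_s: float) -> VersionRecord:
    """Return the highest-scoring version whose latency stays within a budget."""
    eligible = [v for v in versions if v.metrics.get("latency_s", float("inf")) <= max_latency_s]
    if not eligible:
        raise ValueError("no version satisfies the latency budget")
    return max(eligible, key=lambda v: v.metrics[metric])


# Illustrative placeholder values only.
good = VersionRecord("agent-a1b2c3d4", "a1b2c3d4",
                     {"temperature": 0.2, "top_k": 5}, {"quality": 0.91, "latency_s": 1.4})
bad = VersionRecord("agent-e5f6a7b8", "e5f6a7b8",
                    {"temperature": 0.7, "top_k": 5}, {"quality": 0.78, "latency_s": 1.1})

print(diff_versions(good, bad))
# {'git_commit': ('a1b2c3d4', 'e5f6a7b8'), 'params.temperature': (0.2, 0.7)}
print(pick_best([good, bad], metric="quality", max_latency_s=2.0).name)
# agent-a1b2c3d4
```

In practice the records would be filled from whatever MLflow stores for each version. The latency budget in `pick_best` shows one way to turn "compare quality, cost, and latency" into a single deployment decision.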

How LoggedModel Works for Versioning

A LoggedModel in MLflow is not just for traditional machine learning models; it's adapted to be the core of GenAI application versioning. Each distinct state of your application that you want to evaluate, deploy, or refer back to can be captured as a new version of a LoggedModel.

Key Characteristics:

- Central Versioned Entity: Represents a specific version of your GenAI application or a significant, independently versionable component.

- Captures Application State: A LoggedModel version records a particular configuration of code, parameters, and other dependencies.

- Flexible Code Management:

  - Metadata Hub (Primary): Most commonly, LoggedModel links to externally managed code (e.g., via a Git commit hash), acting as a metadata record for that code version along with its associated configurations and MLflow entities (evaluations, traces).

  - Packaged Artifacts (Optional): For specific deployment needs (like Databricks Model Serving), LoggedModel can also bundle the application code and dependencies directly.

- Lifecycle Tracking: The history of LoggedModel versions allows you to track the application's evolution, compare performance, and manage its lifecycle from development to production.
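"Captures Application State" implies that a new version should appear when either the code or the configuration changes. The quick start below names versions by commit hash alone, so a configuration-only change would reuse the same name.

Here is a minimal sketch of one way to close that gap, assuming the configuration is a JSON-serializable dict: append a short, order-independent hash of the configuration to the version name. `config_fingerprint`, `version_name` and the `<app>-<commit>-<config hash>` scheme are hypothetical illustrations, not an MLflow convention.

```python
from __future__ import annotations

import hashlib
import json
from typing import Any


def config_fingerprint(config: dict[str, Any], length: int = 8) -> str:
    """Return a stable short hash of a JSON-serializable configuration.

    sort_keys=True makes the hash independent of dict insertion order,
    so logically identical configurations map to the same fingerprint.
    """
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:length]


def version_name(app_name: str, git_commit: str, config: dict[str, Any]) -> str:
    """Hypothetical naming scheme: <app>-<8-char commit>-<config hash>."""
    return f"{app_name}-{git_commit[:8]}-{config_fingerprint(config)}"


config = {"llm": {"model": "gpt-4o-mini", "temperature": 0.7}, "max_tokens": 1000}
print(version_name("customer_support_agent", "0123456789abcdef", config))
```

The resulting string could be passed as the `name` argument to `mlflow.set_active_model()` in place of the commit-only name used in the quick start.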

Quick Start Example: Versioning an Agent's Configuration

To use the mlflow.set_active_model() functionality demonstrated below for version tracking, MLflow 3 is required. You can install it using:

```bash
pip install --upgrade "mlflow>=3.1"
```

To connect for logging to Databricks-hosted MLflow Tracking, use pip install --upgrade "mlflow[databricks]>=3.1" instead.

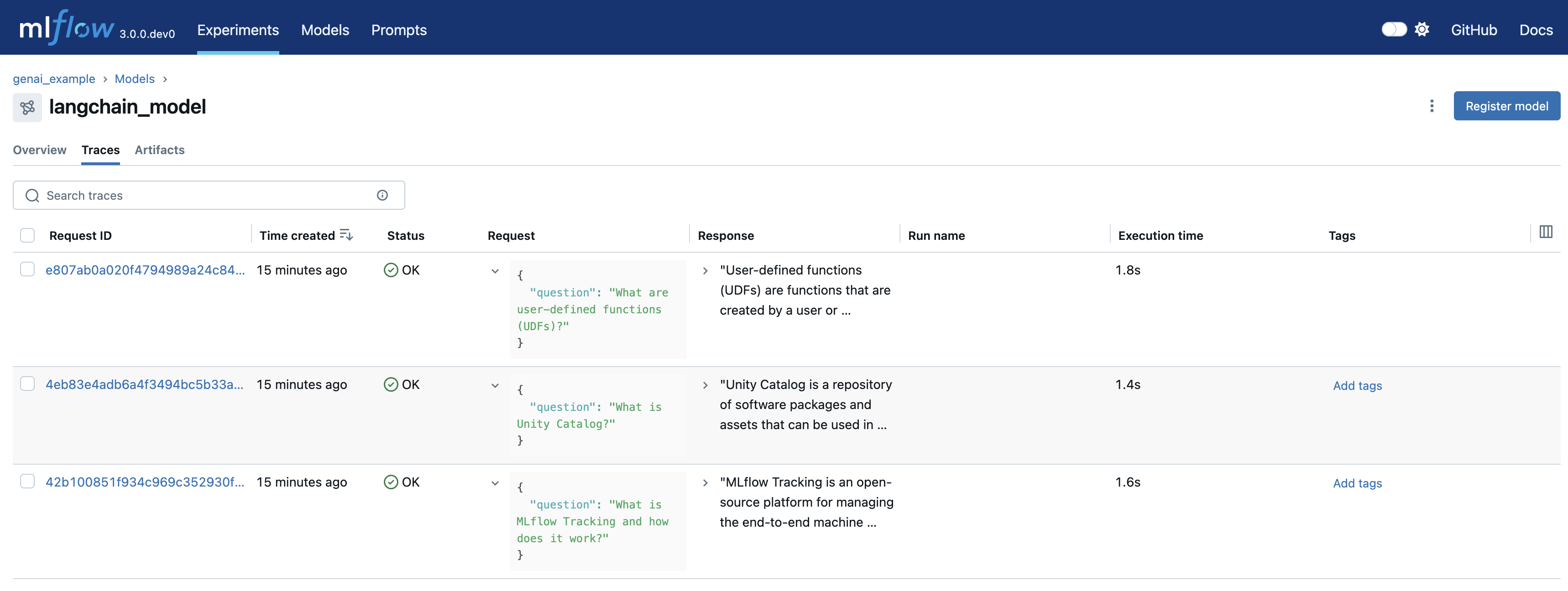

Here's how to quickly version your GenAI agent and link its traces, which might be generated during interactive development or evaluation. This example uses mlflow.set_active_model() with the current git commit hash to establish a unique version context, ensuring that any generated traces are automatically associated with this specific code version.

```python
import os
import subprocess

import mlflow
import openai

# Configure OpenAI API Key (replace with your actual key)
os.environ["OPENAI_API_KEY"] = "your-api-key-here"

# Configure MLflow Tracking
mlflow.set_experiment("my-genai-app")

# Get the current git commit hash (first 8 characters)
git_commit = (
    subprocess.check_output(["git", "rev-parse", "HEAD"]).decode("ascii").strip()[:8]
)

# Define your application version using the git commit
app_name = "customer_support_agent"
logged_model_name = f"{app_name}-{git_commit}"

# Set the active model context - traces will be linked to this
mlflow.set_active_model(name=logged_model_name)

# Enable autologging for OpenAI, which automatically logs traces
mlflow.openai.autolog()

# Define and test your agent code - traces are automatically linked
client = openai.OpenAI()

questions = [
    "How do I reset my password?",
    "What are your business hours?",
    "Can I get a refund for my last order?",
    "Where can I find the user manual for product model number 15869?",
    "I'm having trouble with the payment page, can you help?",
]

for question in questions:
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": question}],
        temperature=0.7,
        max_tokens=1000,
    )
    # Print the answer; the trace for this call is already linked to the active model
    print(response.choices[0].message.content)
```

In this example:

- mlflow.openai.autolog() captures traces from OpenAI calls and automatically associates them with the active model context.

- The git commit hash is used as part of the model name to uniquely identify this version of the application.

- mlflow.set_active_model() establishes that subsequent traces should be linked to this specific version.

- Any OpenAI calls made after setting the active model will have their traces automatically linked to this LoggedModel version.

This provides a lightweight way to version your application and link traces to specific code commits.
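The quick start's `subprocess.check_output(["git", "rev-parse", "HEAD"])` call has two gaps:

- It raises an error when run outside a Git repository or where `git` is not installed.
- A commit hash alone does not reflect uncommitted edits, so two different working states can share one version name.

The sketch below is a more defensive helper. `current_git_version` is a hypothetical name. The `-dirty` suffix borrows the convention of `git describe --dirty` and is not something MLflow requires. The `"nogit"` fallback is an arbitrary placeholder.

```python
import subprocess


def current_git_version(default: str = "nogit", length: int = 8) -> str:
    """Return a short commit hash, suffixed with '-dirty' if the tree has uncommitted changes.

    Falls back to `default` when git is not installed or the current
    directory is not inside a git repository with at least one commit.
    """
    try:
        commit = subprocess.check_output(
            ["git", "rev-parse", "HEAD"], stderr=subprocess.DEVNULL, text=True
        ).strip()[:length]
        # `git status --porcelain` prints nothing when the working tree is clean.
        status = subprocess.check_output(
            ["git", "status", "--porcelain"], stderr=subprocess.DEVNULL, text=True
        )
    except (subprocess.CalledProcessError, FileNotFoundError):
        return default
    return f"{commit}-dirty" if status.strip() else commit


print(current_git_version())
```

With this helper, the version name in the quick start could be built as `f"{app_name}-{current_git_version()}"`. A `-dirty` name then signals that the traces came from code that is not fully captured by the commit.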

Next Steps

Now that you understand why versioning your GenAI application is crucial and how LoggedModel facilitates this, you can explore how to implement it:

- Track Application Versions with MLflow: Learn the primary method for creating and managing LoggedModel versions when your application code is managed externally.