Build with MLflow GenAI

Learn how to get started with MLflow for building production-ready GenAI applications.

Get Started

Build your first GenAI app with MLflow

Environment Setup

Install and configure MLflow

API Reference

Complete API documentation

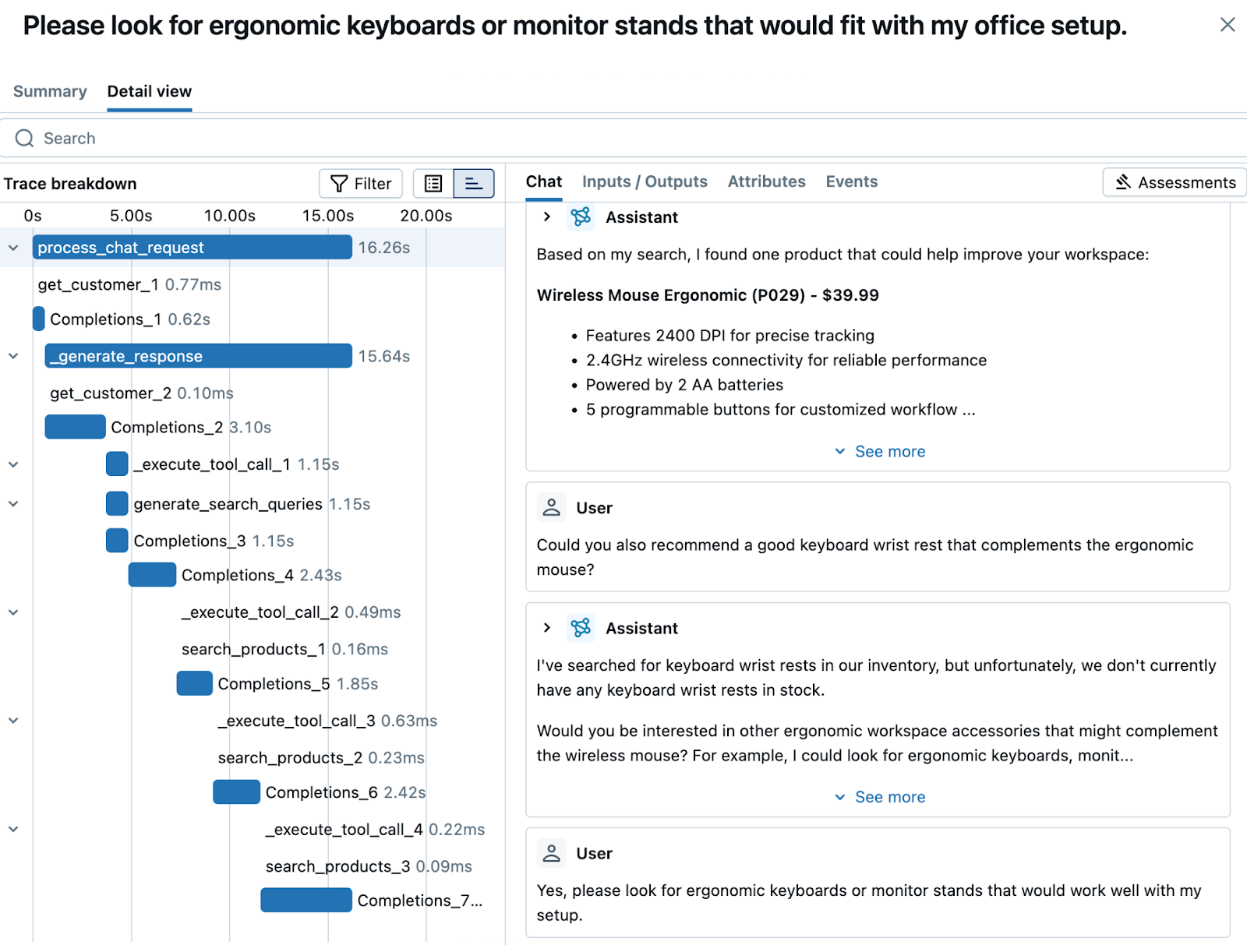

Tracing & Debug

Observe AI application flows

Evaluation

Test and improve AI quality

Prompt Engineering

Design and version prompts

Deploy & Serve

Production deployment guide

Classical ML

Traditional ML workflows

Integrations

Framework connections

Why choose MLflow?

Open Source

Join thousands of teams building GenAI with MLflow. MLflow is a Linux Foundation project, so your AI infrastructure stays open and vendor-neutral.

Production-Ready Platform

Deploy anywhere with confidence. From local servers to cloud platforms, MLflow handles the complexity of GenAI deployment, monitoring, and optimization.

End-to-End Lifecycle

Manage the complete GenAI journey from experimentation to production. Track prompts, evaluate quality, deploy models, and monitor performance in one platform.

Framework Integration

Use any GenAI framework or model provider. With 20+ native integrations and extensible APIs, MLflow adapts to your tech stack, not the other way around.

Complete Observability

See inside every AI decision with comprehensive tracing that captures prompts, retrievals, tool calls, and model responses. Debug complex workflows with confidence.

Automated Quality Assurance

Replace manual testing with LLM judges and custom metrics. Systematically evaluate every change to ensure consistent improvements in your AI applications.

Running Anywhere

MLflow runs in a variety of environments, including your local machine, on-premises clusters, cloud platforms, and managed services. Because MLflow is open source and vendor-neutral, you have access to its core capabilities, such as tracking, evaluation, and observability, wherever you do machine learning.