Tracing LangGraph🦜🕸️

LangGraph is an open-source library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows.

MLflow Tracing provides automatic tracing for LangGraph as an extension of its LangChain integration. Once you enable auto-tracing for LangChain by calling the mlflow.langchain.autolog() function, MLflow automatically captures the graph execution into a trace and logs it to the active MLflow Experiment.

import mlflow

mlflow.langchain.autolog()

The MLflow LangGraph integration is not only about tracing. MLflow offers a full tracking experience for LangGraph, including model tracking, dependency management, and evaluation. Please check out the MLflow LangChain Flavor to learn more!

Example Usage

Running the following code will generate a trace for the graph as shown in the above video clip.

from typing import Literal

import mlflow
from langchain_core.messages import AIMessage, ToolCall
from langchain_core.outputs import ChatGeneration, ChatResult
from langchain_core.tools import tool
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

# Enabling tracing for LangGraph (LangChain)
mlflow.langchain.autolog()

# Optional: Set a tracking URI and an experiment
mlflow.set_tracking_uri("http://localhost:5000")
mlflow.set_experiment("LangGraph")


@tool
def get_weather(city: Literal["nyc", "sf"]):
    """Use this to get weather information."""
    if city == "nyc":
        return "It might be cloudy in nyc"
    elif city == "sf":
        return "It's always sunny in sf"


llm = ChatOpenAI(model="gpt-4o-mini")
tools = [get_weather]
graph = create_react_agent(llm, tools)

# Invoke the graph
result = graph.invoke(
    {"messages": [{"role": "user", "content": "what is the weather in sf?"}]}
)

Token Usage Tracking

MLflow >= 3.1.0 supports token usage tracking for LangGraph. The token usage for each LLM call during a graph invocation will be logged in the mlflow.chat.tokenUsage span attribute, and the total usage in the entire trace will be logged in the mlflow.trace.tokenUsage metadata field.

import json

import mlflow

mlflow.langchain.autolog()

# Execute the agent graph defined in the previous example
graph.invoke({"messages": [{"role": "user", "content": "what is the weather in sf?"}]})

# Get the trace object just created
last_trace_id = mlflow.get_last_active_trace_id()
trace = mlflow.get_trace(trace_id=last_trace_id)

# Print the token usage
total_usage = trace.info.token_usage
print("== Total token usage: ==")
print(f" Input tokens: {total_usage['input_tokens']}")
print(f" Output tokens: {total_usage['output_tokens']}")
print(f" Total tokens: {total_usage['total_tokens']}")

# Print the token usage for each LLM call
print("\n== Token usage for each LLM call: ==")
for span in trace.data.spans:
    if usage := span.get_attribute("mlflow.chat.tokenUsage"):
        print(f"{span.name}:")
        print(f" Input tokens: {usage['input_tokens']}")
        print(f" Output tokens: {usage['output_tokens']}")
        print(f" Total tokens: {usage['total_tokens']}")

== Total token usage: ==
 Input tokens: 149
 Output tokens: 135
 Total tokens: 284

== Token usage for each LLM call: ==
ChatOpenAI_1:
 Input tokens: 58
 Output tokens: 87
 Total tokens: 145
ChatOpenAI_2:
 Input tokens: 91
 Output tokens: 48
 Total tokens: 139
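In the sample output above, the per-call figures add up to the trace-level totals. The following is a minimal sketch of that check using plain dictionaries copied from the sample output rather than a live trace. The helper name sum_usage is hypothetical and not part of MLflow.

```python
from collections import Counter

# Per-call usage, copied from the sample output above. In a real run these
# dictionaries would come from span.get_attribute("mlflow.chat.tokenUsage").
per_call_usage = {
    "ChatOpenAI_1": {"input_tokens": 58, "output_tokens": 87, "total_tokens": 145},
    "ChatOpenAI_2": {"input_tokens": 91, "output_tokens": 48, "total_tokens": 139},
}


def sum_usage(usages):
    """Add up token counts across a collection of usage dictionaries.

    Hypothetical helper for illustration; not an MLflow API.
    """
    totals = Counter()
    for usage in usages:
        totals.update(usage)  # Counter.update adds counts key by key
    return dict(totals)


totals = sum_usage(per_call_usage.values())
print(totals)
# {'input_tokens': 149, 'output_tokens': 135, 'total_tokens': 284}
```

A comparison like this against trace.info.token_usage may help spot LLM calls whose usage was not captured, assuming every call in the graph reports token counts.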

Adding spans within a node or a tool

By combining auto-tracing with the manual tracing APIs, you can add child spans inside a node or tool to get more detailed insight into that step.

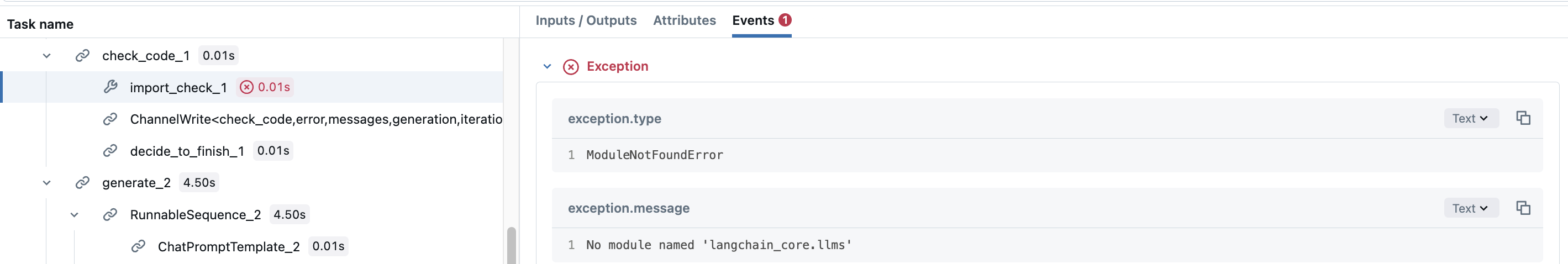

Let's take LangGraph's Code Assistant tutorial as an example. The check_code node actually consists of two different validations for the generated code. You may want to add a span for each validation to see which validations were executed. To do so, simply create manual spans inside the node function.

import mlflow
from mlflow.entities import SpanType


# GraphState is the state schema defined in the Code Assistant tutorial.
def code_check(state: GraphState):
    # State
    messages = state["messages"]
    code_solution = state["generation"]
    iterations = state["iterations"]

    # Get solution components
    imports = code_solution.imports
    code = code_solution.code

    # Check imports
    try:
        # Create a child span manually with mlflow.start_span() API
        with mlflow.start_span(name="import_check", span_type=SpanType.TOOL) as span:
            span.set_inputs(imports)
            exec(imports)
            span.set_outputs("ok")
    except Exception as e:
        error_message = [("user", f"Your solution failed the import test: {e}")]
        messages += error_message
        return {
            "generation": code_solution,
            "messages": messages,
            "iterations": iterations,
            "error": "yes",
        }

    # Check execution
    try:
        code = imports + "\n" + code
        with mlflow.start_span(name="execution_check", span_type=SpanType.TOOL) as span:
            span.set_inputs(code)
            exec(code)
            span.set_outputs("ok")
    except Exception as e:
        error_message = [("user", f"Your solution failed the code execution test: {e}")]
        messages += error_message
        return {
            "generation": code_solution,
            "messages": messages,
            "iterations": iterations,
            "error": "yes",
        }

    # No errors
    return {
        "generation": code_solution,
        "messages": messages,
        "iterations": iterations,
        "error": "no",
    }

This way, the span for the check_code node will have child spans that record whether each validation passed or failed, along with the exception details for any failure.
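The two validations in the node share the same shape: open a span, record the input, execute the source, and record the output on success. One possible way to factor that out is sketched below. This is not taken from the tutorial: run_check, RecordingSpan, and fake_start_span are hypothetical names, and the fake span factory exists only so the sketch runs without MLflow installed. Unlike the node above, the sketch executes the source in a fresh namespace.

```python
from contextlib import contextmanager


class RecordingSpan:
    """Hypothetical stand-in for an MLflow span; it only records what it is given."""

    def __init__(self, name):
        self.name = name
        self.inputs = None
        self.outputs = None

    def set_inputs(self, inputs):
        self.inputs = inputs

    def set_outputs(self, outputs):
        self.outputs = outputs


recorded = []  # spans created by the fake factory, in creation order


@contextmanager
def fake_start_span(name, **kwargs):
    """Stand-in for mlflow.start_span so the sketch runs without MLflow."""
    span = RecordingSpan(name)
    recorded.append(span)
    yield span


def run_check(name, source, start_span=fake_start_span):
    """Execute `source` inside a child span.

    Returns None on success, or the exception that was raised. The exception
    leaves the `with` block before it is caught, mirroring the node above.
    """
    try:
        with start_span(name=name) as span:
            span.set_inputs(source)
            exec(source, {})  # fresh namespace; an assumption of this sketch
            span.set_outputs("ok")
    except Exception as e:
        return e
    return None


import_error = run_check("import_check", "import json")
exec_error = run_check("execution_check", "import json\njson.loads('not json')")
print(import_error, type(exec_error).__name__)
```

Inside a real node, the start_span argument would presumably be something like functools.partial(mlflow.start_span, span_type=SpanType.TOOL), and the node would build its error message from the returned exception.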

Disable auto-tracing

Auto-tracing for LangGraph can be disabled globally by calling mlflow.langchain.autolog(disable=True) or mlflow.autolog(disable=True).