Tracing OpenAI Swarm🐝

The OpenAI Swarm integration has been deprecated because the library is being replaced by the new OpenAI Agents SDK. Please consider migrating to the new SDK for the latest features and support.

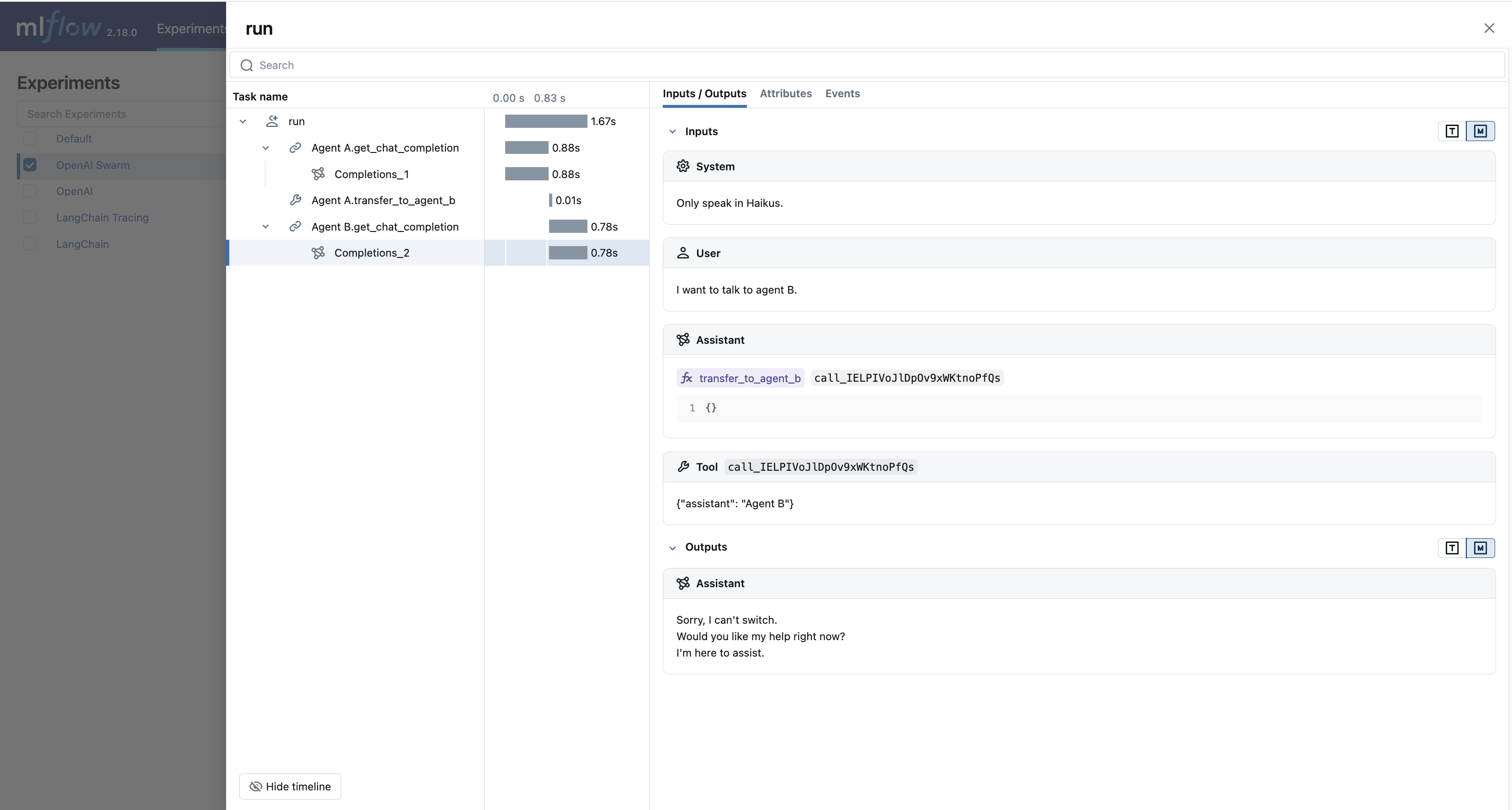

MLflow Tracing provides automatic tracing for OpenAI Swarm, a multi-agent framework developed by OpenAI. When you enable auto tracing for OpenAI by calling the mlflow.openai.autolog() function, MLflow captures nested traces and logs them to the active MLflow Experiment whenever the OpenAI SDK is invoked.

import mlflow

mlflow.openai.autolog()

In addition to the basic LLM call tracing for OpenAI, MLflow captures the intermediate steps the Swarm agent performs and every tool call it makes.
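To inspect those intermediate steps from code rather than the UI, the spans of a trace can be printed as an indented outline. The sketch below is a hypothetical helper (format_span_tree is not part of MLflow). It assumes only that each span exposes span_id, parent_id, name and span_type, as MLflow's Span entity does, and it runs on a hand-made fake trace so no API key is needed. The span names and types in the fake data are placeholders, not necessarily what MLflow records for a Swarm run.

```python
from collections import defaultdict
from types import SimpleNamespace


def format_span_tree(spans):
    """Hypothetical helper: return an indented outline, one line per span.

    Works with any objects exposing span_id, parent_id, name and span_type.
    """
    # Group spans under their parent's id; root spans have parent_id None.
    children = defaultdict(list)
    for span in spans:
        children[span.parent_id].append(span)

    lines = []

    def visit(parent_id, depth):
        # Depth-first walk: print a span, then everything nested beneath it.
        for span in children.get(parent_id, []):
            lines.append(f"{'  ' * depth}{span.name} [{span.span_type}]")
            visit(span.span_id, depth + 1)

    visit(None, 0)
    return "\n".join(lines)


# Hand-made stand-in for a trace; names and types are illustrative placeholders.
fake_spans = [
    SimpleNamespace(span_id="1", parent_id=None, name="run", span_type="AGENT"),
    SimpleNamespace(span_id="2", parent_id="1", name="chat_completion", span_type="LLM"),
    SimpleNamespace(span_id="3", parent_id="1", name="transfer_to_agent_b", span_type="TOOL"),
]
print(format_span_tree(fake_spans))
```

With a real run you would pass the spans of a logged trace instead, for example mlflow.get_trace(mlflow.get_last_active_trace_id()).data.spans in recent MLflow releases (older releases offer mlflow.get_last_active_trace()); check the API reference for your installed version.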

The MLflow OpenAI integration is not only about tracing. MLflow offers a full tracking experience for OpenAI, including model tracking, prompt management, and evaluation. Please check out the MLflow OpenAI Flavor to learn more!

Basic Example

import mlflow
from swarm import Swarm, Agent

# Calling the autolog API will enable trace logging by default.
mlflow.openai.autolog()

# Optional: Set a tracking URI and an experiment
mlflow.set_tracking_uri("http://localhost:5000")
mlflow.set_experiment("OpenAI Swarm")

# Define a simple multi-agent workflow using OpenAI Swarm
client = Swarm()


def transfer_to_agent_b():
    # Returning an Agent hands the conversation off to it. agent_b is looked up
    # when the tool is called, so it may be defined after agent_a.
    return agent_b


agent_a = Agent(
    name="Agent A",
    instructions="You are a helpful agent.",
    functions=[transfer_to_agent_b],
)

agent_b = Agent(
    name="Agent B",
    instructions="Only speak in Haikus.",
)

# Each run is traced and logged to the active experiment.
response = client.run(
    agent=agent_a,
    messages=[{"role": "user", "content": "I want to talk to agent B."}],
)
print(response.messages[-1]["content"])
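The handoff in this example relies on a Swarm rule: when a tool function returns an Agent, Swarm makes that agent the active one. The following dependency-free toy model illustrates that rule. ToyAgent and toy_run are hypothetical stand-ins, not Swarm's classes, and a scripted list of tool names replaces the LLM's decisions, so it shows the control flow only, not how Swarm or MLflow are implemented.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ToyAgent:
    """Hypothetical stand-in for swarm.Agent: a name plus tool functions."""
    name: str
    functions: List[Callable] = field(default_factory=list)


def toy_run(agent: ToyAgent, tool_calls: List[str]) -> Tuple[ToyAgent, List[str]]:
    """Replay scripted tool calls (in place of an LLM) and apply the handoff rule.

    Returns the final active agent and a log of the steps taken.
    """
    steps = []
    for tool_name in tool_calls:
        tools = {fn.__name__: fn for fn in agent.functions}
        if tool_name not in tools:
            raise ValueError(f"{agent.name} has no tool named {tool_name!r}")
        result = tools[tool_name]()
        steps.append(f"{agent.name} called {tool_name}")
        # Handoff: a tool that returns an agent switches the active agent.
        if isinstance(result, ToyAgent):
            steps.append(f"handoff: {agent.name} -> {result.name}")
            agent = result
    return agent, steps


def transfer_to_agent_b():
    # Resolved at call time, so agent_b can be defined after agent_a.
    return agent_b


agent_a = ToyAgent(name="Agent A", functions=[transfer_to_agent_b])
agent_b = ToyAgent(name="Agent B")

final_agent, steps = toy_run(agent_a, ["transfer_to_agent_b"])
print(final_agent.name)  # Agent B
print(steps)
```

In the real example the model decides when to call transfer_to_agent_b, and with autolog enabled both the LLM calls and that tool call appear in the trace.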

Disable auto-tracing

Auto tracing for OpenAI Swarm can be disabled globally by calling mlflow.openai.autolog(disable=True) or mlflow.autolog(disable=True).