MLflow Sentence-Transformers Flavor

Attention

The sentence-transformers flavor is under active development and is marked as Experimental. Public APIs are subject to change, and new features may be added as the flavor evolves.

Introduction

Sentence-Transformers is a Python library that specializes in producing high-quality, semantically rich embeddings for sentences and paragraphs. Built on top of the well-known Transformers library by 🤗 Hugging Face, Sentence-Transformers is tailored for tasks that require an understanding of sentence-level context. It is widely used in NLP applications such as semantic search, text clustering, and similarity assessment.

Leveraging pre-trained models like BERT, RoBERTa, and DistilBERT, which are fine-tuned for sentence embeddings, Sentence-Transformers simplifies the process of generating meaningful vector representations of text. The library stands out for its simplicity, efficiency, and the quality of embeddings it produces.

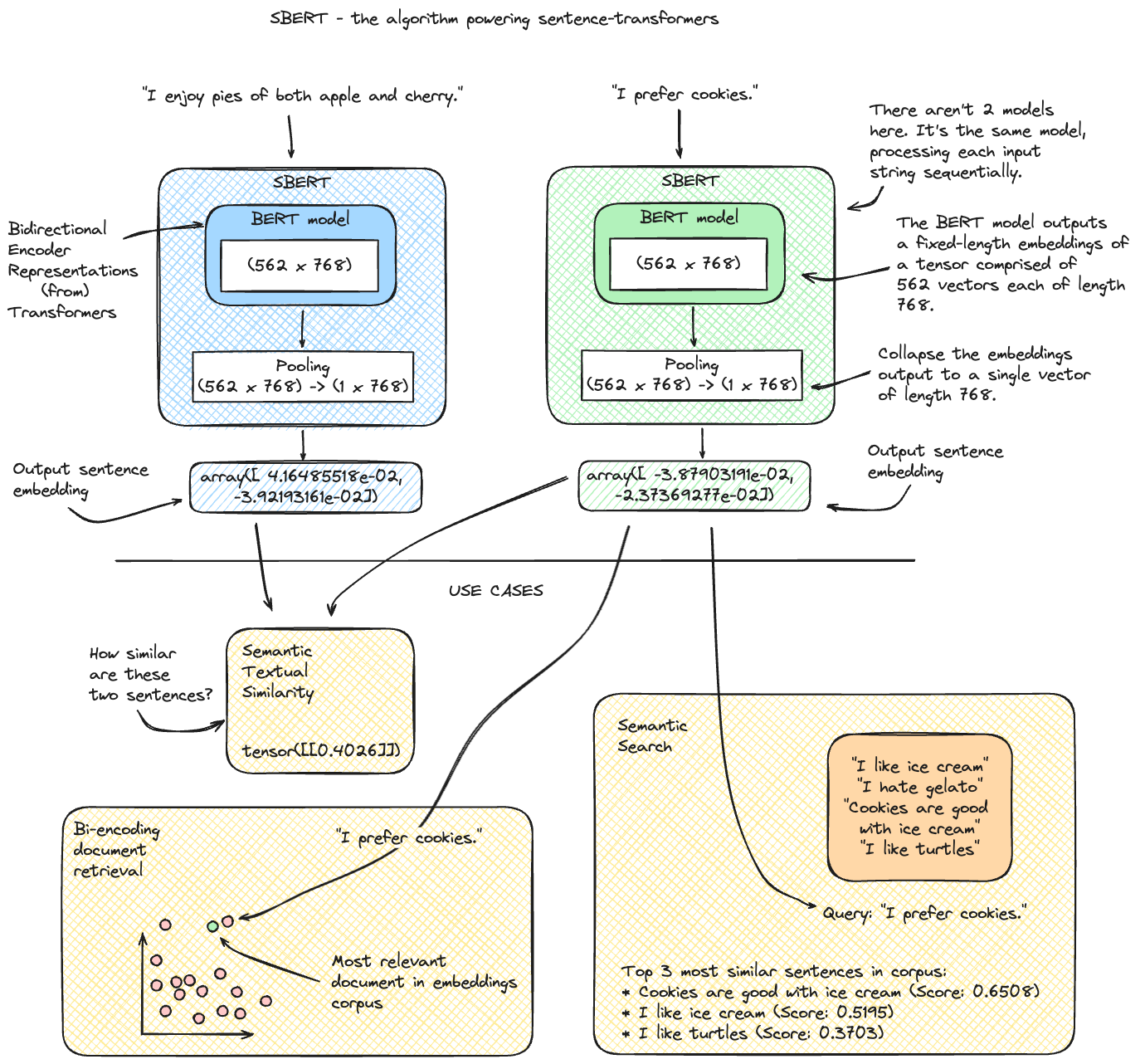

The library features a number of powerful high-level utility functions for performing common follow-on tasks with sentence embeddings. These include:

Semantic Textual Similarity: Assessing the semantic similarity between two sentences.

Semantic Search: Searching for the most semantically similar sentences in a corpus for a given query.

Clustering: Grouping similar sentences together.

Information Retrieval: Finding the most relevant sentences for a given query via document retrieval and ranking.

Paraphrase Mining: Finding text entries that have similar (or identical) meaning in a large corpus of text.
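All of these tasks reduce to comparing embedding vectors. As a dependency-free sketch of what semantic similarity, semantic search, and paraphrase mining compute, the snippet below scores hand-made 3-dimensional vectors with cosine similarity. The vectors and the helper names (cosine_similarity, semantic_search, paraphrase_mining) are hypothetical stand-ins that only mirror the idea; the library's own sentence_transformers.util.cos_sim, util.semantic_search, and util.paraphrase_mining operate on real embeddings produced by model.encode() and are not implemented this way.

```python
import math
from itertools import combinations


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, corpus_vecs, top_k=2):
    """Return (corpus_index, score) pairs for the top_k most similar entries."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(corpus_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


def paraphrase_mining(vecs, threshold=0.9):
    """Return (score, i, j) for every pair at or above the threshold, best first."""
    pairs = [
        (cosine_similarity(vecs[i], vecs[j]), i, j)
        for i, j in combinations(range(len(vecs)), 2)
    ]
    return sorted((p for p in pairs if p[0] >= threshold), reverse=True)


# Hypothetical hand-made vectors standing in for real sentence embeddings.
corpus = [
    [0.9, 0.1, 0.0],     # "A cat sits on the mat."
    [0.85, 0.15, 0.05],  # "A kitten rests on a rug."
    [0.0, 0.2, 0.95],    # "Stock prices fell today."
]
query = [0.88, 0.12, 0.02]  # "Where is the cat?"

print(semantic_search(query, corpus, top_k=2))
print(paraphrase_mining(corpus, threshold=0.9))
```

With real models, the vectors have hundreds of dimensions, but the ranking logic is the same: the closer two embeddings point in the same direction, the more similar the sentences are judged to be.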

What makes this library so special?

Let’s take a look at a very basic representation of how the Sentence-Transformers library works and what you can do with it!

Integrating Sentence-Transformers with MLflow, a platform dedicated to streamlining the entire machine learning lifecycle, enhances the experiment tracking and deployment capabilities for these specialized NLP models. MLflow’s support for Sentence-Transformers enables practitioners to effectively manage experiments, track different model versions, and deploy models for various NLP tasks with ease.

Sentence-Transformers offers:

High-Quality Sentence Embeddings: Efficient generation of sentence embeddings that capture the contextual and semantic nuances of language.

Pre-Trained Model Availability: Access to a diverse range of pre-trained models fine-tuned for sentence embedding tasks, streamlining the process of embedding generation.

Ease of Use: Simplified API, making it accessible for both NLP experts and newcomers.

Custom Training and Fine-Tuning: Flexibility to fine-tune models on specific datasets or train new models from scratch for tailored NLP solutions.

With MLflow’s Sentence-Transformers flavor, users benefit from:

Streamlined Experiment Tracking: Easily log parameters, metrics, and sentence embedding models during the training and fine-tuning process.

Hassle-Free Deployment: Deploy sentence embedding models for various applications with straightforward API calls.

Broad Model Compatibility: Support for a range of sentence embedding models from the Sentence-Transformers library, ensuring access to the latest in embedding technology.

Whether you’re working on semantic text similarity, clustering, or information retrieval, MLflow’s integration with Sentence-Transformers provides a robust and efficient pathway for incorporating advanced sentence-level understanding into your applications.

Features

With MLflow’s Sentence-Transformers flavor, users can:

Save and log Sentence-Transformer models within MLflow with the respective APIs: mlflow.sentence_transformers.save_model() and mlflow.sentence_transformers.log_model().

Track detailed experiments, including parameters, metrics, and artifacts associated with fine-tuning runs.

Deploy sentence embedding models for practical applications.

Utilize the mlflow.pyfunc.PythonModel flavor for generic Python function inference, enabling complex and powerful custom ML solutions.

What can you do with Sentence Transformers and MLflow?

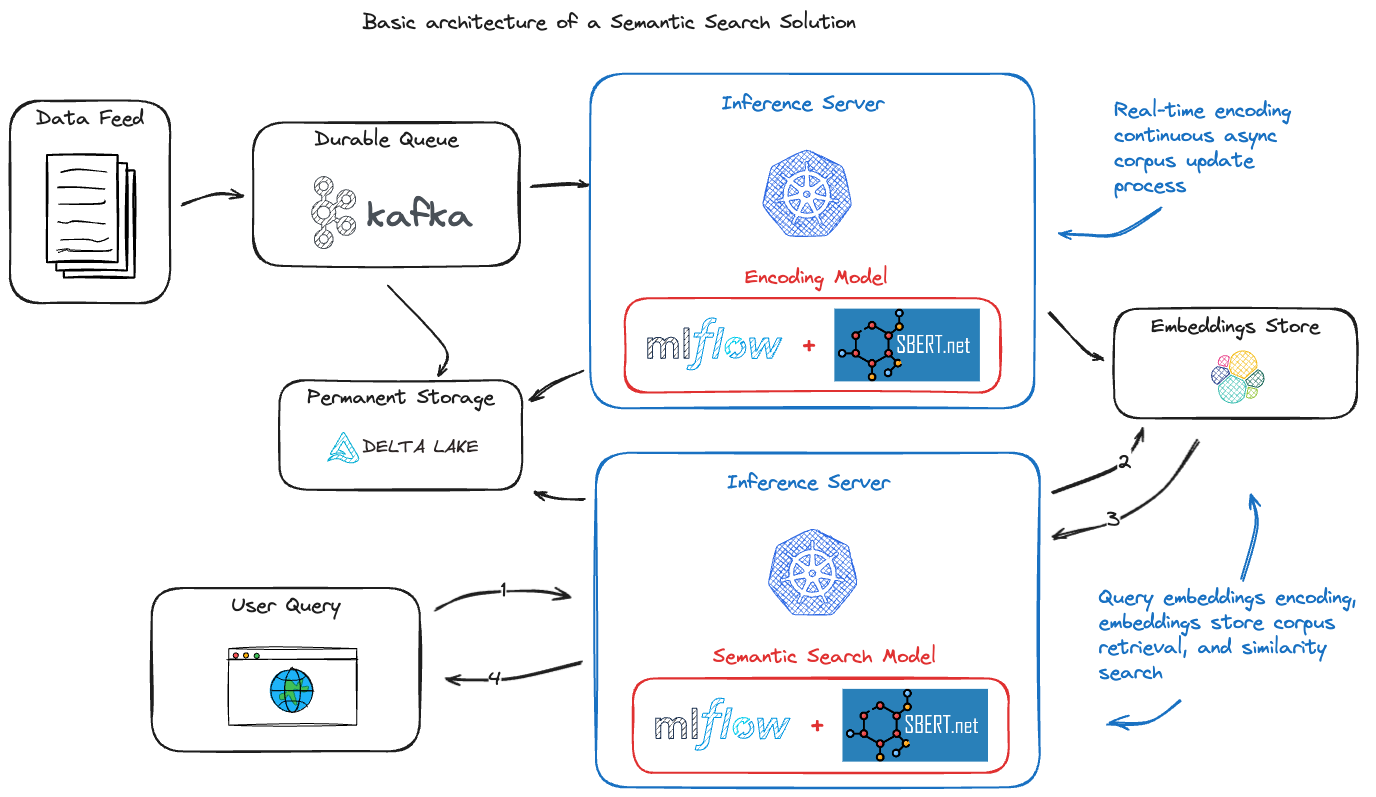

One of the more powerful applications that can be built with these tools is a semantic search engine. By using readily available open source tooling, you can build a semantic search engine that can find the most semantically similar sentences in a corpus for a given query. This is a significant improvement over traditional keyword-based search engines, which are limited in their ability to understand the context of a query.

An example high-level architecture for such an application stack is shown in the diagram above.
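The core loop of such a search engine is small: embed the corpus once, store the vectors, then embed each incoming query and rank the stored vectors against it. The following is a minimal in-memory sketch under stated assumptions: InMemorySemanticIndex and topic_encode are hypothetical names, and topic_encode is a toy encoder that maps hand-picked related words to the same dimension, standing in for a real Sentence-Transformers model (in practice you might pass model.encode, or the predict method of a model loaded from MLflow). Production systems typically replace the linear scan with a vector database or an approximate nearest-neighbor index.

```python
import math


class InMemorySemanticIndex:
    """Hypothetical minimal index: embed documents once, rank them against a query."""

    def __init__(self, encode):
        self.encode = encode  # any callable mapping a string to a list of floats
        self.docs = []
        self.vectors = []

    @staticmethod
    def _normalize(vec):
        norm = math.sqrt(sum(x * x for x in vec)) or 1.0  # avoid dividing by zero
        return [x / norm for x in vec]

    def add(self, doc):
        # Indexing step: store a unit-length embedding next to the raw text.
        self.docs.append(doc)
        self.vectors.append(self._normalize(self.encode(doc)))

    def search(self, query, top_k=3):
        # Query step: for unit-length vectors, the dot product is the cosine similarity.
        q = self._normalize(self.encode(query))
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        ranked = sorted(range(len(self.docs)), key=lambda i: scores[i], reverse=True)
        return [(self.docs[i], scores[i]) for i in ranked[:top_k]]


# Hypothetical toy encoder: one dimension per hand-picked topic word list.
TOPICS = [{"cat", "kitten", "dog", "puppy"}, {"stock", "market", "prices", "shares"}]


def topic_encode(text):
    words = text.lower().replace(".", " ").replace("?", " ").split()
    return [float(sum(w in topic for w in words)) for topic in TOPICS]


index = InMemorySemanticIndex(topic_encode)
for doc in ["A kitten naps.", "Stock prices rose.", "The dog barks at the cat."]:
    index.add(doc)
print(index.search("Where is my puppy?", top_k=2))
```

Note that the query "Where is my puppy?" shares no words with the matching documents; it is the encoder, not keyword overlap, that places them close together, which is the property a real embedding model provides at much higher fidelity.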

Deployment Made Easy

Once a model is trained, it needs to be deployed for inference. MLflow’s integration with Sentence Transformers simplifies this by providing functions such as mlflow.sentence_transformers.load_model() and mlflow.pyfunc.load_model(), which allow for easy model serving.

To get a deeper understanding of the deployment options that MLflow has available, you can read more about deploying models with MLflow, using the deployments API, and starting a local model serving endpoint.
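Once a model is running behind a local serving endpoint (for example one started with mlflow models serve -m <model_uri> -p 5000), clients send text over HTTP. The stdlib sketch below builds such a request, assuming the server listens on 127.0.0.1:5000, accepts a JSON body of the form {"inputs": [...]} on the /invocations route, and returns a JSON object with a "predictions" key, as MLflow 2.x scoring servers do. The helper names build_embedding_request and parse_embeddings are hypothetical; the network call is left commented out so the sketch runs without a server.

```python
import json
import urllib.request


def build_embedding_request(sentences, host="127.0.0.1", port=5000):
    """Build a POST request for a locally served MLflow model (host/port are assumptions)."""
    body = json.dumps({"inputs": list(sentences)}).encode("utf-8")
    return urllib.request.Request(
        url=f"http://{host}:{port}/invocations",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_embeddings(response_body):
    """Extract the embedding vectors from the server's JSON response."""
    return json.loads(response_body)["predictions"]


request = build_embedding_request(["MLflow makes deployment easy."])
# With a server running, send the request like this:
# with urllib.request.urlopen(request) as resp:
#     vectors = parse_embeddings(resp.read())
print(request.full_url, request.get_method())
```

Keeping request construction separate from sending makes it easy to point the same client at a local server during development and at a remote deployment later by changing only the host and port.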

Getting Started with the MLflow Sentence Transformers Flavor - Tutorials and Guides

The tutorials and guides for this flavor focus on different ways to use the sentence-transformers library together with MLflow’s APIs for tracking and inference.

Detailed Documentation

To learn more about the details of the MLflow flavor for sentence transformers, delve into the comprehensive guide below.

View the Comprehensive Guide

Learning More About Sentence Transformers

Sentence Transformers is a versatile framework for computing dense vector representations of sentences, paragraphs, and images. Based on transformer networks like BERT, RoBERTa, and XLM-RoBERTa, it offers state-of-the-art performance across various tasks. The framework is designed for easy use and customization, making it suitable for a wide range of applications in natural language processing and beyond.

For those interested in delving deeper into Sentence Transformers, the following resources are invaluable:

Official Documentation and Source Code

Official Documentation: For a comprehensive guide to getting started, advanced usage, and API references, visit the Sentence Transformers Documentation.

GitHub Repository: The Sentence Transformers GitHub repository is the primary source for the latest code, examples, and updates. Here, you can also report issues, contribute to the project, or explore how the community is using and extending the framework.

Official Guides and Tutorials for Sentence Transformers

Training Custom Models: The framework supports fine-tuning of custom embedding models to achieve the best performance on specific tasks.

Publications and Research: To understand the scientific foundations of Sentence Transformers, the publications section offers a collection of research papers that have been integrated into the framework.

Application Examples: Explore a variety of application examples demonstrating the practical use of Sentence Transformers in different scenarios.

Library Resources

PyPI Package: The PyPI page for Sentence Transformers provides information on installation, version history, and package dependencies.

Conda Forge Package: For users preferring Conda as their package manager, the Conda Forge page for Sentence Transformers is the go-to resource for installation and package details.

Pretrained Models: Sentence Transformers offers an extensive range of pretrained models optimized for various languages and tasks. These models can be easily integrated into your projects.

Sentence Transformers is continually evolving, with regular updates and additions to its capabilities. Whether you’re a researcher, developer, or enthusiast in the field of natural language processing, these resources will help you make the most of this powerful tool.