MLflow Transformers Flavor

Attention

The transformers flavor is in active development and is marked as Experimental. Public APIs may change and new features are

subject to be added as additional functionality is brought to the flavor.

Introduction

Transformers by 🤗 Hugging Face represents a cornerstone in the realm of machine learning, offering state-of-the-art capabilities for a multitude of frameworks including PyTorch, TensorFlow, and JAX. This library has become the de facto standard for natural language processing (NLP) and audio transcription processing. It also provides a compelling and advanced set of options for computer vision and multimodal AI tasks. Transformers achieves all of this by providing pre-trained models and accessible high-level APIs that are not only powerful but also versatile and easy to implement.

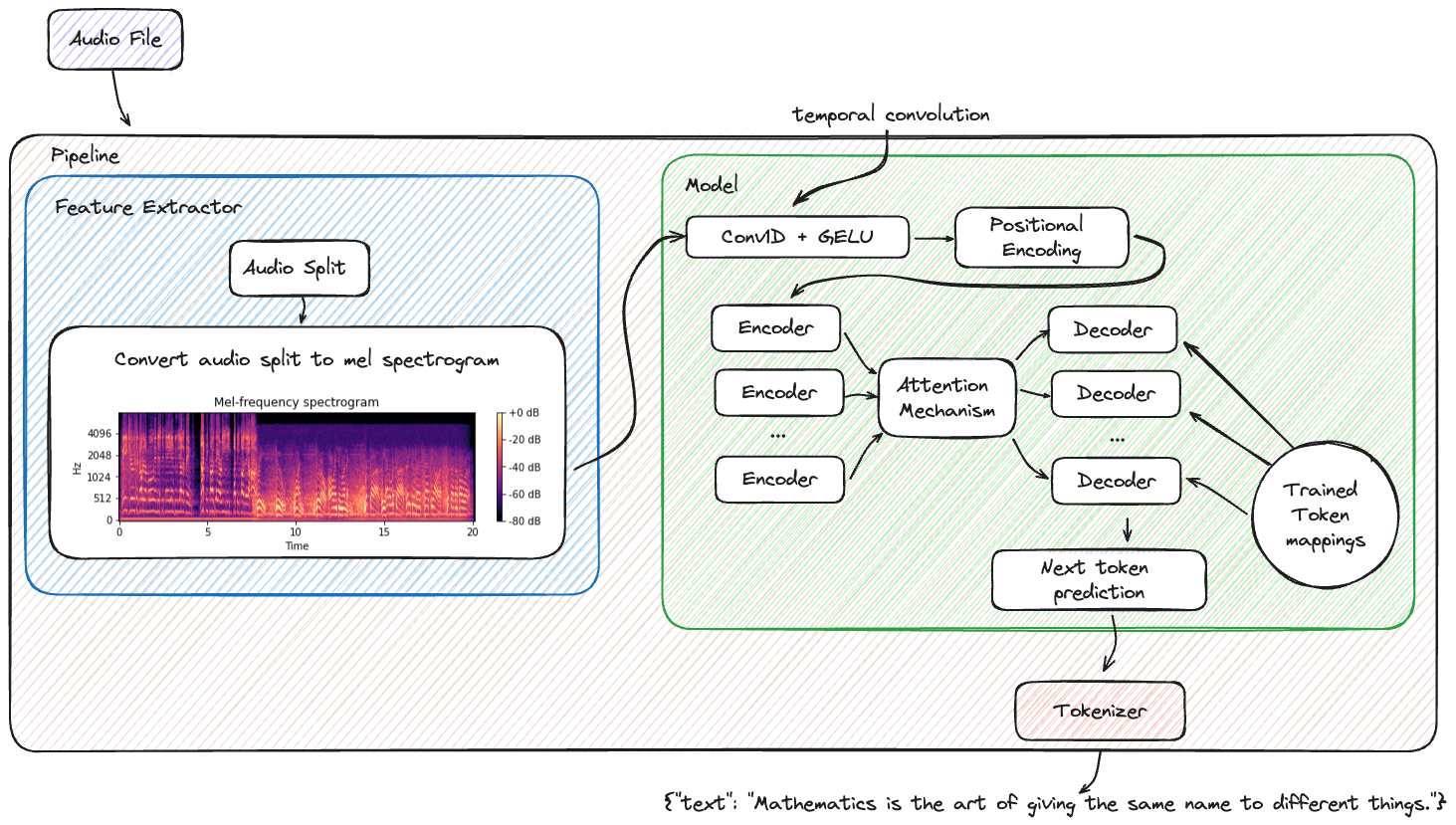

For instance, one of the cornerstones of the simplicity of the transformers library is the pipeline API, an encapsulation of the most common NLP tasks into a single API call. This API allows users to perform a variety of tasks based on the specified task without having to worry about the underlying model or the preprocessing steps.

The integration of the Transformers library with MLflow enhances the management of machine learning workflows, from experiment tracking to model deployment. This combination offers a robust and efficient pathway for incorporating advanced NLP and AI capabilities into your applications.

Key Features of the Transformers Library:

Access to Pre-trained Models: A vast collection of pre-trained models for various tasks, minimizing training time and resources.

Task Versatility: Support for multiple modalities including text, image, and speech processing tasks.

Framework Interoperability: Compatibility with PyTorch, TensorFlow, JAX, ONNX, and TorchScript.

Community Support: An active community for collaboration and support, accessible via forums and the Hugging Face Hub.

MLflow’s Transformers Flavor:

MLflow supports the use of the Transformers package by providing:

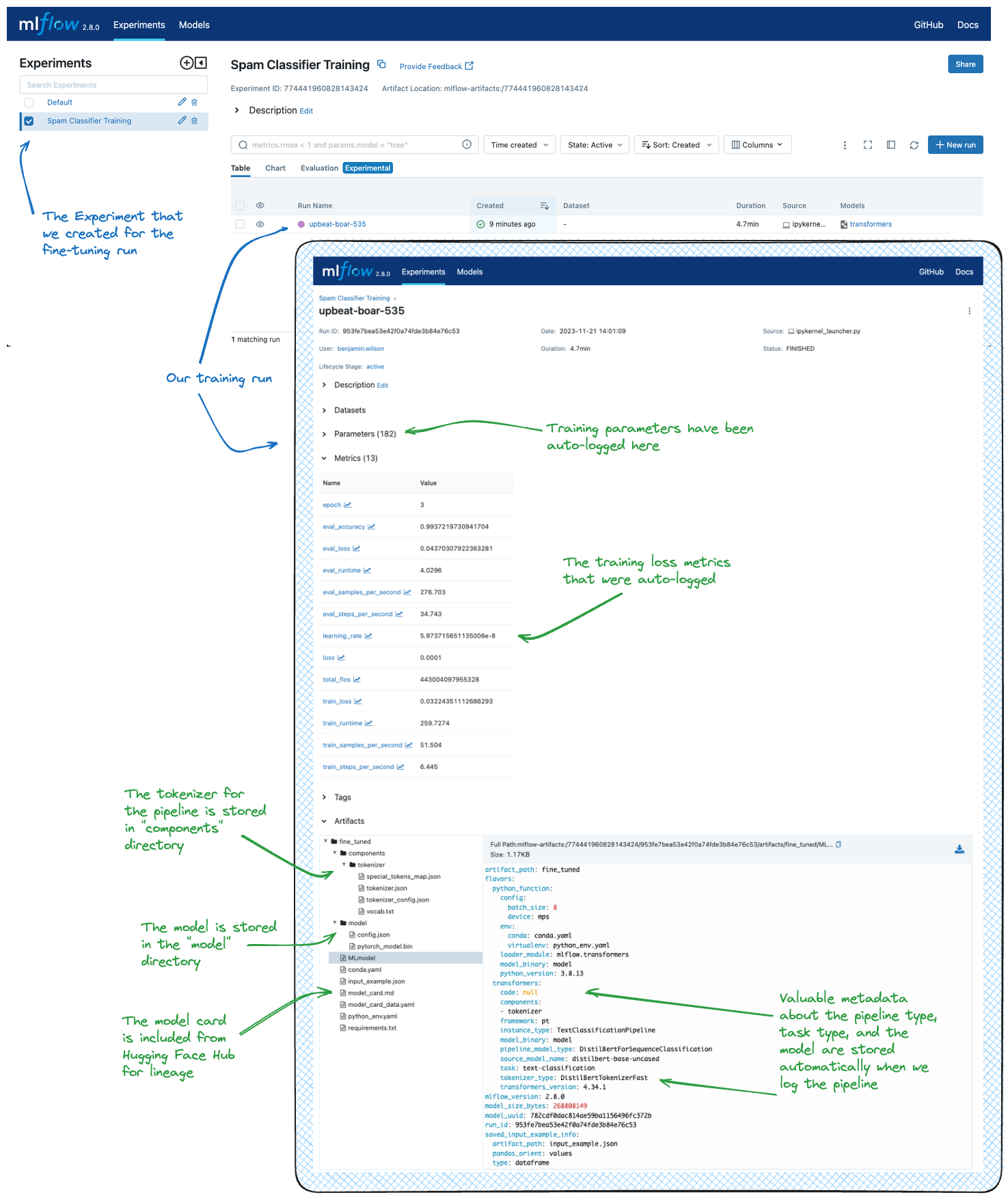

Simplified Experiment Tracking: Efficient logging of parameters, metrics, and models during the fine-tuning process.

Effortless Model Deployment: Streamlined deployment to various production environments.

Library Integration: Integration with HuggingFace libraries like Accelerate, PEFT for model optimization.

Prompt Management: Save prompt templates with transformers pipelines to optimize inference with less boilerplate.

Example Use Case:

For an illustration of fine-tuning a model and logging the results with MLflow, refer to the fine-tuning tutorials. These tutorial demonstrate the process of fine-tuning a pretrained foundational model into the application-specific model such as a spam classifier, SQL generator. MLflow plays a pivotal role in tracking the fine-tuning process, including datasets, hyperparameters, performance metrics, and the final model artifacts. The image below shows the result of the tutorial within the MLflow UI.

Deployment Made Easy

Once a model is trained, it needs to be deployed for inference.

MLflow’s integration with Transformers simplifies this by providing functions such as mlflow.transformers.load_model() and

mlflow.pyfunc.load_model(), which allow for easy model serving.

As part of the feature support for enhanced inference with transformers, MLflow provides mechanisms to enable the use of inference

arguments that can reduce the computational overhead and lower the memory requirements

for deployment.

Getting Started with the MLflow Transformers Flavor - Tutorials and Guides

Below, you will find a number of guides that focus on different use cases using transformers that leverage MLflow’s APIs for tracking and inference capabilities.

Introductory Quickstart to using Transformers with MLflow

If this is your first exposure to transformers or use transformers extensively but are new to MLflow, this is a great place to start.

Transformers Fine-Tuning Tutorials with MLflow

Fine-tuning a model is a common task in machine learning workflows. These tutorials are designed to showcase how to fine-tune a model using the transformers library with harnessing MLflow’s APIs for tracking experiment configurations and results.

Use Case Tutorials for Transformers with MLflow

Interested in learning about how to leverage transformers for tasks other than basic text generation? Want to learn more about the breadth of problems that you can solve with transformers and MLflow?

These more advanced tutorials are designed to showcase different applications of the transformers model architecture and how to leverage MLflow to track and deploy these models.

Important Details to be aware of with the transformers flavor

When working with the transformers flavor in MLflow, there are several important considerations to keep in mind:

Experimental Status: The Transformers flavor in MLflow is marked as experimental, which means that APIs are subject to change, and new features may be added over time with potentially breaking changes.

PyFunc Limitations: Not all output from a Transformers pipeline may be captured when using the python_function flavor. For example, if additional references or scores are required from the output, the native implementation should be used instead. Also not all the pipeline types are supported for pyfunc. Please refer to Loading a Transformers Model as a Python Function for the supported pipeline types and their input and output format.

Supported Pipeline Types: Not all Transformers pipeline types are currently supported for use with the python_function flavor. In particular, new model architectures may not be supported until the transformers library has a designated pipeline type in its supported pipeline implementations.

Input and Output Types: The input and output types for the python_function implementation may differ from those expected from the native pipeline. Users need to ensure compatibility with their data processing workflows.

Model Configuration: When saving or logging models, the model_config can be used to set certain parameters. However, if both model_config and a ModelSignature with parameters are saved, the default parameters in ModelSignature will override those in model_config.

Audio and Vision Models: Audio and text-based large language models are supported for use with pyfunc, while other types like computer vision and multi-modal models are only supported for native type loading.

Prompt Templates: Prompt templating is currently supported for a few pipeline types. For a full list of supported pipelines, and more information about the feature, see this link.

Logging Large Models

By default, MLflow consumes certain memory footprint and storage space for logging models. This can be a concern when working with large foundational models with billions of parameters. To address this, MLflow provides a few optimization techniques to reduce resource consumption during logging and speed up the logging process. Please refer to the Working with Large Models in MLflow Transformers flavor guide to learn more about these tips.

Working with tasks for Transformer Pipelines

In MLflow Transformers flavor, task plays a crucial role in determining the input and output format of the model. Please refer to the Tasks in MLflow Transformers guide on how to use the native Transformers task types, and leverage the advanced tasks such as llm/v1/chat and llm/v1/completions for OpenAI-compatible inference.

Detailed Documentation

To learn more about the nuances of the transformers flavor in MLflow, delve into the comprehensive guide, which covers:

Pipelines vs. Component Logging: Explore the different approaches for saving model components or complete pipelines and understand the nuances of loading these models for various use cases.

Transformers Model as a Python Function : Familiarize yourself with the various

transformerspipeline types compatible with the pyfunc model flavor. Understand the standardization of input and output formats in the pyfunc model implementation for the flavor, ensuring seamless integration with JSON and Pandas DataFrames.Prompt Template: Learn how to save a prompt template with transformers pipelines to optimize inference with less boilerplate.

Model Config and Model Signature Params for Inference: Learn how to leverage

model_configandModelSignaturefor flexible and customized model loading and inference.Automatic Metadata and ModelCard Logging: Discover the automatic logging features for model cards and other metadata, enhancing model documentation and transparency.

Model Signature Inference : Learn about MLflow’s capability within the

transformersflavor to automatically infer and attach model signatures, facilitating easier model deployment.Overriding Pytorch dtype : Gain insights into optimizing

transformersmodels for inference, focusing on memory optimization and data type configurations.Input Data Types for Audio Pipelines: Understand the specific requirements for handling audio data in transformers pipelines, including the handling of different input types like str, bytes, and np.ndarray.

PEFT Models in MLflow Transformers flavor: PEFT (Parameter-Efficient Fine-Tuning) is natively supported in MLflow, enabling various optimization techniques like LoRA, QLoRA, and more for reducing fine-tuning cost significantly. Check out the guide and tutorials to learn more about how to leverage PEFT with MLflow.

Learn more about Transformers

Interested in learning more about how to leverage transformers for your machine learning workflows?

🤗 Hugging Face has a fantastic NLP course. Check it out and see how to leverage Transformers, Datasets, Tokenizers, and Accelerate.