New Features

Looking to learn about significant new releases in MLflow?

Find out about the details of major features, changes, and deprecations below.

The infer_code_paths option, set when logging a model, determines which additional code modules the model needs, keeping the training and production environments consistent.

The MLflow evaluate API now supports fully customizable system prompts to create entirely novel evaluation metrics for GenAI use cases.

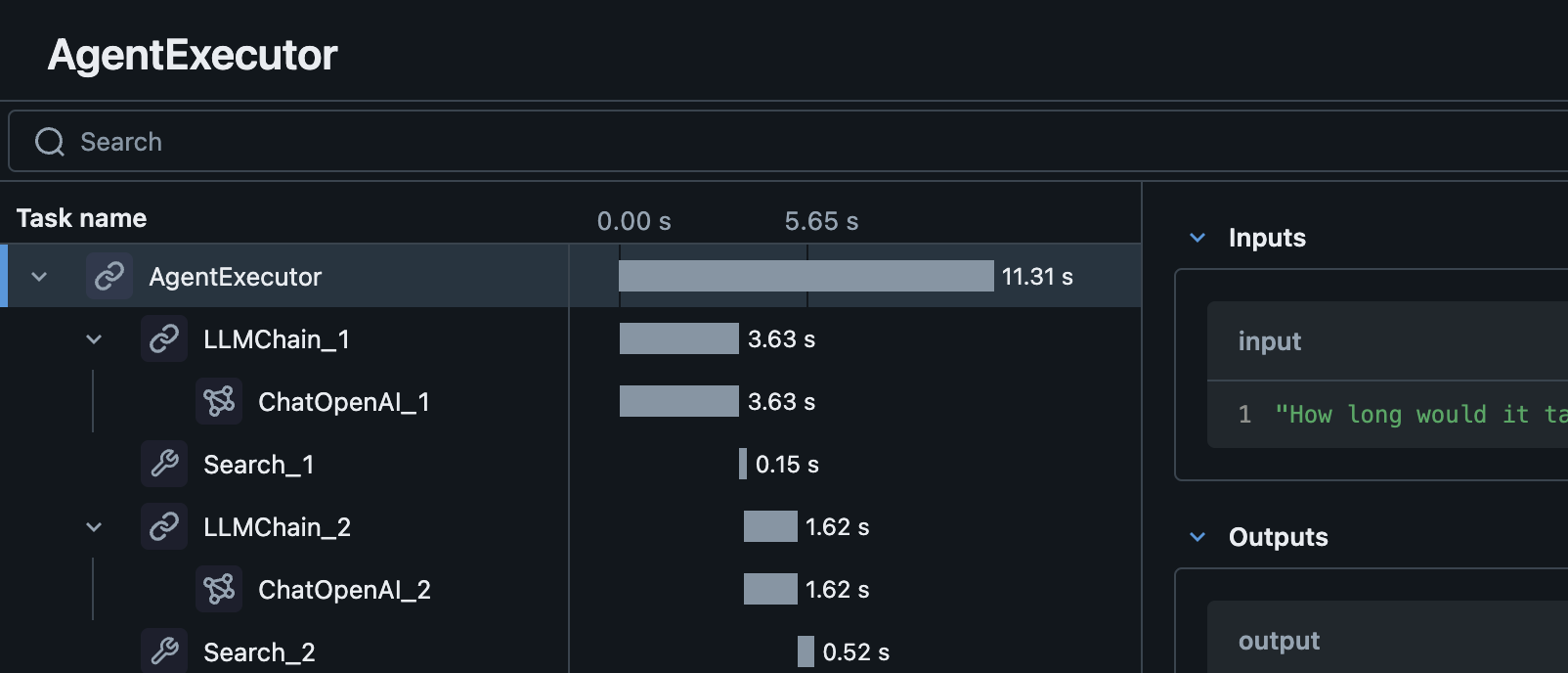

LangChain models and custom Python models now support a predict_stream API, allowing for generator return types for streaming outputs.
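As a rough illustration of what a generator return type means here, the sketch below uses a plain Python class as a stand-in. The class name `EchoStreamModel` is hypothetical, and the `(context, model_input, params)` argument order is an assumption modeled on the custom Python model interface; a real model would subclass `mlflow.pyfunc.PythonModel` and receive its context from MLflow.

```python
import types
from typing import Any, Dict, Iterator, Optional


class EchoStreamModel:
    """Plain-Python stand-in for a custom model with a streaming method.

    Assumption: a real model would subclass mlflow.pyfunc.PythonModel;
    here the context argument is simply None.
    """

    def predict(self, context: Any, model_input: str,
                params: Optional[Dict[str, Any]] = None) -> str:
        # Non-streaming path: collect every chunk and return one string.
        return " ".join(self.predict_stream(context, model_input, params))

    def predict_stream(self, context: Any, model_input: str,
                       params: Optional[Dict[str, Any]] = None) -> Iterator[str]:
        # Streaming path: yield one chunk at a time instead of a full result.
        for token in model_input.split():
            yield token.upper()


model = EchoStreamModel()
stream = model.predict_stream(None, "streaming outputs arrive in pieces")
is_generator = isinstance(stream, types.GeneratorType)
chunks = list(stream)
```

A caller can iterate over the stream and forward each chunk as soon as it is produced, rather than waiting for the full response.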

The LangChain flavor in MLflow now supports defining a model as a code file to simplify logging and loading of LangChain models.

MLflow now supports asynchronous artifact logging, allowing for faster and more efficient logging of models with many artifacts.
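The general idea behind asynchronous artifact logging can be sketched with the standard library alone. This is not MLflow's implementation: `AsyncArtifactLogger`, `log_artifact`, and `flush` below are hypothetical names, and a local directory stands in for the artifact store.

```python
import shutil
import tempfile
from concurrent.futures import Future, ThreadPoolExecutor
from pathlib import Path
from typing import List


class AsyncArtifactLogger:
    """Hypothetical helper: copies files to a store directory in background threads."""

    def __init__(self, dest_dir: Path, max_workers: int = 4) -> None:
        self._dest = Path(dest_dir)
        self._dest.mkdir(parents=True, exist_ok=True)
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._pending: List[Future] = []

    def log_artifact(self, local_path: Path) -> Future:
        # Returns immediately; the copy runs on a worker thread.
        target = self._dest / Path(local_path).name
        future = self._pool.submit(shutil.copy2, local_path, target)
        self._pending.append(future)
        return future

    def flush(self) -> None:
        # Block until every queued copy has finished; re-raise any failure.
        for future in self._pending:
            future.result()
        self._pending.clear()

    def close(self) -> None:
        self.flush()
        self._pool.shutdown(wait=True)


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "src"
    src.mkdir()
    logger = AsyncArtifactLogger(Path(tmp) / "store")
    for i in range(5):
        path = src / f"artifact_{i}.txt"
        path.write_text(f"payload {i}")
        logger.log_artifact(path)  # does not wait for the copy
    logger.close()
    stored = sorted(p.name for p in (Path(tmp) / "store").iterdir())
```

The caller queues every artifact without blocking and pays the waiting cost once, at `flush` or `close`, which is where the speedup for models with many artifacts would come from.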

The transformers flavor now has standardized support for embedding models.

Embedding models now return a standard llm/v1/embeddings output format to conform to OpenAI embedding response structures.
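For readers unfamiliar with the OpenAI embedding response structure, here is a minimal sketch of its shape. The helper `to_embeddings_response` is hypothetical, and the field names follow OpenAI's response; the exact set of fields that MLflow emits for llm/v1/embeddings is an assumption and may differ.

```python
from typing import Any, Dict, Sequence


def to_embeddings_response(vectors: Sequence[Sequence[float]],
                           model: str,
                           prompt_tokens: int) -> Dict[str, Any]:
    """Wrap raw vectors in an OpenAI-style embeddings payload (hypothetical helper)."""
    return {
        "object": "list",
        "data": [
            # One entry per input text, indexed in input order.
            {"object": "embedding", "index": i, "embedding": list(vec)}
            for i, vec in enumerate(vectors)
        ],
        "model": model,
        "usage": {"prompt_tokens": prompt_tokens, "total_tokens": prompt_tokens},
    }


response = to_embeddings_response(
    [[0.1, 0.2], [0.3, 0.4]], model="demo-embedder", prompt_tokens=4
)
second_vector = response["data"][1]["embedding"]
```

Because every embedding model returns the same envelope, client code can read `data[i]["embedding"]` without knowing which model produced it.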

The transformers flavor in MLflow has received a significant feature overhaul:

- All supported pipeline types can now be logged without restriction

- Pipelines using foundation models can now be logged without copying the large model weights

OpenAI-compatible chat models are now easier than ever to build in MLflow! ChatModel is a new pyfunc subclass that makes it easy to deploy and serve chat models with MLflow.
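To show what "OpenAI-compatible" means in practice, the sketch below builds a chat completion payload by hand. `ReverseChatModel` is a hypothetical plain-Python stand-in, and messages are passed as dictionaries for simplicity; a real model would subclass `mlflow.pyfunc.ChatModel`, whose message and parameter types are not reproduced here.

```python
import time
from typing import Any, Dict, List, Optional


class ReverseChatModel:
    """Hypothetical stand-in that answers with the last user message reversed."""

    def predict(self, context: Any, messages: List[Dict[str, str]],
                params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
        params = params or {}
        # Find the most recent user turn in the conversation.
        last_user = next(
            m["content"] for m in reversed(messages) if m["role"] == "user"
        )
        # Return the reply in an OpenAI-style chat completion envelope.
        return {
            "id": "chatcmpl-demo",
            "object": "chat.completion",
            "created": int(time.time()),
            "model": params.get("model", "demo-model"),
            "choices": [
                {
                    "index": 0,
                    "message": {"role": "assistant", "content": last_user[::-1]},
                    "finish_reason": "stop",
                }
            ],
        }


chat_response = ReverseChatModel().predict(
    None,
    [
        {"role": "system", "content": "You are terse."},
        {"role": "user", "content": "hello"},
    ],
)
reply = chat_response["choices"][0]["message"]["content"]
```

Clients written against the OpenAI chat format can consume a response of this shape unchanged, which is the compatibility the ChatModel interface is meant to provide.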

Check out the new tutorial on building an OpenAI-compatible chat model using TinyLlama-1.1B-Chat!